Distributed Transaction

A database transaction is a set of operations to be performed on data as a single logical unit of work. It must either be fully completed or have no effect at all. If a transaction cannot be completed in full, the system will roll it back.

A distributed transaction is a transaction within a distributed environment. It is typically coordinated across nodes connected by a network. Distributed transactions require that operations across all participating nodes must either all succeed or all roll back together.

A distributed database must ensure data consistency across all nodes. DolphinDB implements distributed transactions through Two-Phase Commit (2PC) and Multi-Version Concurrency Control (MVCC) mechanisms. This ensures that each transaction maintains the ACID properties: atomicity, consistency, isolation, and durability.

- Atomicity

A transaction is an atomic operation unit that ensures data writes are either fully completed or not executed at all. For example, when writing data across multiple partitions, the transaction ensures that either all partitions are updated successfully or none are changed. Similarly, if each partition has multiple replicas, the transaction guarantees that either all replicas are updated successfully or none of them are changed.

- Consistency

A transaction can involve write operations on different partitions. Whether the transaction is successfully committed or rolled back, the state of each replica across all partitions remains consistent. In cluster mode, DolphinDB also ensures that the data queried on any node at any time is consistent.

- Isolation

DolphinDB implements transaction isolation through the MVCC mechanism. When replica data is modified or removed, the system creates a new version while preserving the previous version. If the transaction succeeds, the previous version is overwritten; if not, the system reverts to the previous version. This approach allows multiple users to simultaneously read and/or write to the database without conflicts, as each transaction works with its own snapshot of the data.

- Durability

After a transaction is completed, its operation on the data is permanent.

Distributed Transaction Implementation

In traditional single-machine applications, all operations are performed locally, and transaction management is relatively simple. However, in distributed systems, implementing transactions requires considering factors such as data partitioning, network latency, and node failures. To ensure data integrity and high availability in distributed systems, specialized transaction management mechanisms—such as transaction commit protocols, transaction logging, and read-write/write-write separation—must be designed.

Transaction Commit Process Overview

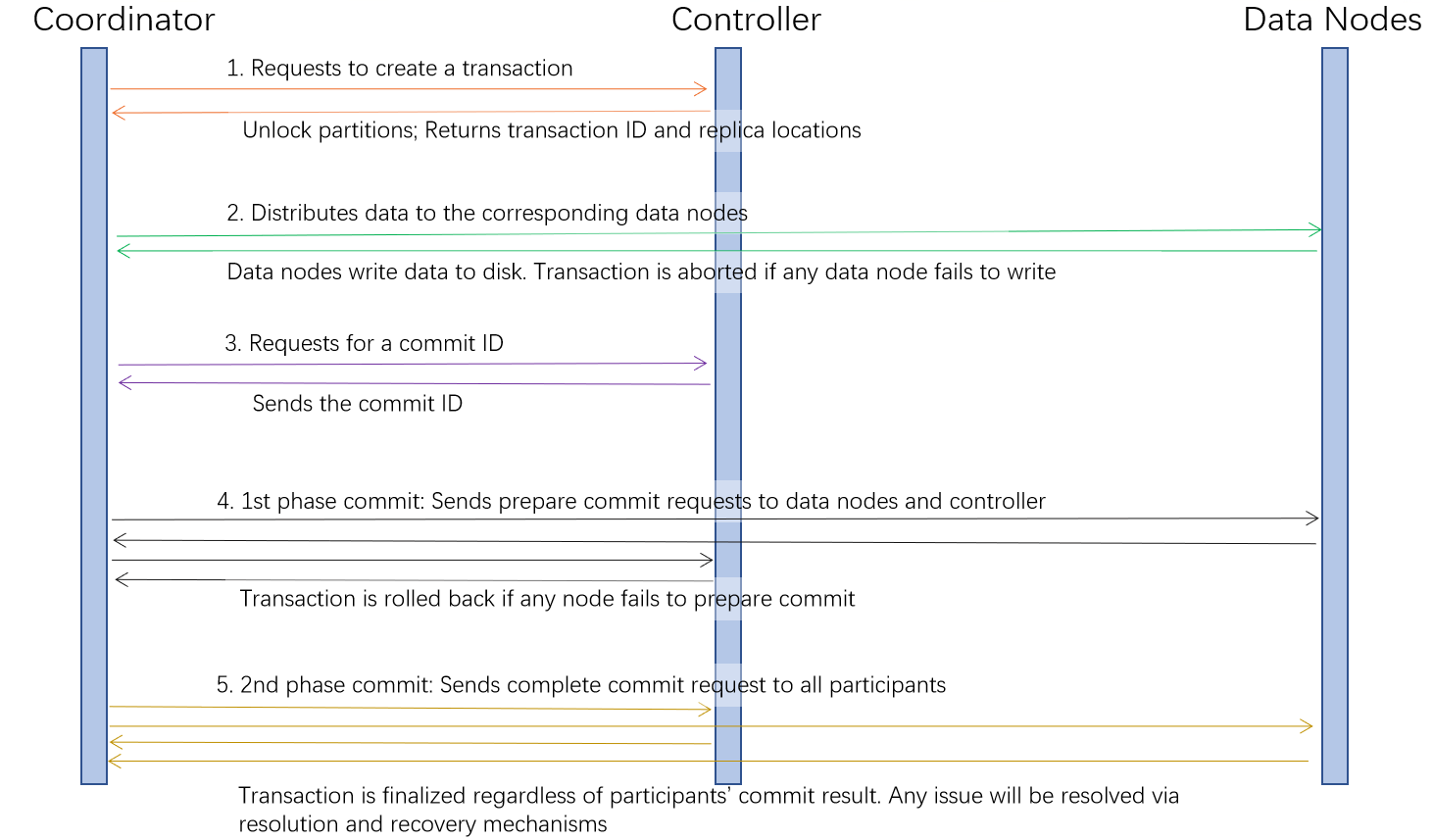

DolphinDB employs a two-phase commit (2PC) mechanism. The process of each transaction is coordinated by the initiating node (known as the coordinator).

- Transaction Creation: The coordinator requests the controller to create a transaction and obtains a transaction ID (tid) along with the locations (chunkId) of the replicas of the involved partitions.

- Data Distribution: The coordinator distributes data to the

corresponding data nodes which then write the data to disk* and report back.

If any data node fails to write, the transaction is aborted and rolled

back.

Note: The data writing mechanism depends on whether the redo log and cache engine are enabled. For details, refer to the next section, "Redo Log and Cache Engine".

- Commit ID Acquisition: Once all data nodes complete the write operation, the coordinator obtains a commit ID (cid) from the controller.

- First-Phase Commit: The coordinator sends prepare requests to all participating data nodes which then vote on whether they are ready to commit the transaction. Once the coordinator has collected all votes, it sends the prepare request to the controller. If all participating nodes succeed in this phase, the controller sets the transaction status to COMMITTED. If any node fails in this phase, the controller sets the transaction status to ROLLBACK, triggering an abort and rollback procedure.

- Second-Phase Commit: The coordinator receives the first-phase commit result from the controller and notifies all data nodes whether to abort or proceed with the transaction. If proceeding, the coordinator sends the second-phase commit request to both the data nodes and the controller, requesting them to finally commit changes. If successful, the transaction status is updated to COMPLETE.

At this stage, the transaction is considered finalized and all involved partitions are unlocked. In cases where a data node or the controller fails during the second-phase commit, the transaction’s final status is determined through the transaction resolution mechanism. For details, see the upcoming section, "Transaction Resolution".

Redo Log and Cache Engine

DolphinDB leverages the redo log and cache engine to ensure transaction durability and improve write performance.

The concept of redo log in DolphinDB is similar to write-ahead logging (WAL) which is a family of techniques for providing atomicity and durability in relational database systems. In a nutshell, the core of the redo log is that changes to database files must be written only after persisting all log records describing the changes. The redo log is a crucial mechanism for ensuring transaction durability, as it guarantees data consistency even in the event of a system crash.

The cache engine is a data write caching mechanism. Data is written to both the cache engine and the redo log simultaneously. Once the data in the cache engine reaches a predefined sizeor time threshold , it is asynchronously written in bulk to the database files. When a transaction spans multiple partitions and involves a small volume of data, writing to disk immediately after each transaction can reduce write efficiency. Instead, by using the cache engine to temporarily store these transactions and writing them to disk in bulk once a threshold is reached, better write performance and a higher compression ratio can be achieved. Additionally, batch sequential writes help improve I/O throughput, thereby enhancing overall system performance.

In addition to automatic disk writes triggered by reaching a threshold, you can also initiate a cache engine disk write using built-in functions. After the transaction-related data is written to disk, cache in the cache engine is garbage collected. Then the corresponding entries in the redo log can be removed. Redo log files are automatically cleared when they reach a predefined size or time threshold. For details on redo log and cache engine, see Redo Log and Cache Engine.

Transaction Resolution

If any issue arises during the second phase of 2PC process, the system initiates a resolution procedure to definitively determine whether the transaction should be committed or rolled back.

Since the controller is the last to vote in the first phase, its state conclusively determines the transaction's final outcome. The resolution process therefore relies solely on the information on the controller. The system doesn’t need to gather information from all the participating nodes via RPC. This streamlined design prevents delays that could arise if any participating node fails to recover, ensuring the resolution process remains efficient (particularly crucial when it’s the controller that initiates the resolution, as the partitions cannot accept writes during this time). It also minimizes the risk of incorrect transaction outcomes due to incomplete information collection.

When a node checks its logs or performs garbage collection and discovers a transaction marked as "COMMITTED" (which indicates that the transaction’s first-phase commit is complete but the second is not), it automatically initiates a resolution request to the controller. The subsequent process is as follows:

- Handling Resolution Requests: Upon receiving a resolution request, the controller first checks the resolution result cache. If a resolution result for this transaction already exists, the result is returned directly. Otherwise, the following two steps are performed:

- Checking Transaction Status:

- If the request comes from the controller itself, the transaction status COMMITTED is returned directly.

- If the request comes from a data node, the controller checks its own status for this transaction. If a result is found, it is returned. If no result is found, return ROLLBACK.

- Finalizing Resolution Result: The resolution result is determined

based on the transaction status returned from the previous step. Possible

status values include UNCOMMITTED, COMMITTED, ROLLBACK, and COMPLETE.

- UNCOMMITTED: Indicates that the first commit phase of the transaction is not yet finished on the controller. The final status cannot be determined yet, and the resolution must be postponed. The current resolution request is placed in a queue to wait for a future resolution attempt.

- COMMITTED: Indicates that the first commit phase of the transaction has been successfully finished on the controller. The system then updates the transaction status to COMPLETE, and this status is broadcast to all participants.

- ROLLBACK or COMPLETE: The corresponding result is broadcast directly to all participants.

Concurrency Management

When multiple independent transactions are executed simultaneously without a predefined sequential order, they are considered concurrent transactions. Reads and writes to the same partition by multiple concurrent transactions can lead to conflicts. This section explains DolphinDB's concurrency control mechanisms for handling write-write and read-write conflicts.

Concurrent Write-Write Operations

When multiple clients concurrently write to the same partition, write conflicts can occur. DolphinDB manages these conflicts through the atomic parameter set during database creation, offering two modes:

- TRANS mode: Write operations are terminated upon detecting a conflict, ensuring transaction atomicity. Users themselves must ensure that concurrent writes to the same partition are prevented.

- CHUNK mode: The system automatically handles conflicts and retries writes, but splits a single write operation into multiple transactions, which cannot guarantee overall atomicity.

Regardless of the mode chosen, DolphinDB ensures that only one transaction can write to a partition at any given time. Here's a detailed explanation of both modes' implementation processes.

When atomic is set to "TRANS", the write process is as follows:

- The client sends a write request.

- The data node receives the request and identifies the target partitions.

- The data node requests a transaction ID (tid) from the controller.

- Using the tid, the data node requests distributed locks for all target

partitions from the controller:

- If all locks are successfully acquired, proceed to step 5.

- If any lock acquisition fails, the system aborts the write operation, returning an error message to the client.

- The system writes data to the partitions. Then it releases the locks after successful write, returning a success message to the client.

When atomic is set to "CHUNK", the write process is as follows:

- The client sends a write request.

- The data node receives the request and identifies the target partitions.

- The data node requests a transaction ID (tid) from the controller.

- Using the tid, the data node requests distributed locks for all target

partitions from the controller.

- If at least one partition lock is successfully acquired, proceed to step 5;

- If all lock acquisitions fail, proceed to step 6.

- Write data to the partitions where locks were successfully acquired, then

release these locks.

- If there are remaining unwritten partitions, return to step 3 to obtain a new tid.

- If all partitions have been written to, return success to client.

- Wait for a short period before returning to step 3 to obtain a new tid and retry writing. If retry count reaches the limit (250 attempts), return write failure to client.

Through this approach, CHUNK mode can automatically handle write conflicts, but in case of system failures, some data may be partially written while other data remains unwritten.

Concurrent Read-Write Operations

DolphinDB's distributed transactions implement snapshot isolation for read and write operations through its two-phase commit process and multi-version concurrency control (MVCC) mechanism. MVCC maintains multiple transaction snapshots for each chunk (which are partition replicas on data nodes). These chunks can exist in multiple versions along a version chain. Whenever a write, update, or delete operation occurs, the system creates a new version by incrementing the chunk's version number. This allows read operations to access older versions while write operations to create new ones, effectively isolating concurrent read and write transactions from each other. To prevent unlimited growth, the system regularly cleans up older versions through garbage collection.

Here's a detailed explanation of DolphinDB’s read-write isolation mechanism:

- Transaction Initialization: The coordinator initiates a transaction by requesting a transaction ID from the controller.

- Commit and Chunk Versioning: Before starting the first-phase commit, the controller generates a globally incrementing cid (commit ID) and records it on all participating data nodes. The data nodes use MVCC to update chunks in new versions. Up to five previous versions are maintained on a data node, with regular cleanups based on retention settings.

- Snapshot ID (sid) Generation: Once the second phase of the commit completes (transaction marked as COMPLETE on controller), the controller generates a globally incrementing sid (snapshot ID) for the affected partition and records it in the version chain.

- Querying the Latest Metadata: When the coordinator needs the latest chunk metadata for read operations, the controller finds the highest sid in the version chain and maps it to the corresponding latest cid, identifying the most recent readable version.

- Data Retrieval: Using the returned cid, the coordinator reads the correct version of the chunk on the relevant data node.

Multi-Replica Writing Management

DolphinDB supports a multi-replica mechanism, enabling partition data to be stored in multiple replicas across different data nodes. This section takes a closer look at how data are written to multiple replicas during the transaction process.

- When a transaction begins, the coordinator requests information of all target partitions from the controller.

- The controller gathers the metadata of the target partitions and the status of available replicas. If a new partition is to be created, the controller initializes the partition and selects one or more data nodes (based on the dfsReplicationFactor configuration) to store the replica.

- The controller then provides the coordinator with the metadata and the information of available replicas. Using this information, the coordinator initiates transaction operations for each replica through RPC.

- Once the 2PC commit process of transactions for all replicas are successfully completed, the controller updates the version numbers for each involved replica. However, if any participating node fails during the transaction (e.g., due to a crash), the transaction is aborted and rolled back.

Maintaining Data Consistency Across Replicas

Data nodes periodically send heartbeat signals to the controller. If a data node goes offline unexpectedly, the controller marks it as unavailable. After the offline node restarts, it reports its replica information (including version numbers) to the controller. The controller compares the reported version numbers with its own metadata. If any replicas are found to have outdated versions, the controller triggers an automatic online recovery process to ensure consistency among replicas. For more details on the online recovery mechanism, refer to Online Recovery.

Transaction High Availability

DolphinDB’s distributed transactions are designed with high availability in mind, ensuring that transactions can still progress smoothly even with node failures. The following sections outline how DolphinDB achieves transaction high availability, based on the type of node involved.

During Controller Failures

DolphinDB ensures controller availability through the Raft algorithm, which allows the system to tolerate failures of fewer than half of the controllers. As long as the majority of controllers remain operational, the Raft algorithm synchronizes transaction status across them.

Even in the event of a leader node failure, the system can perform a leader election, and the new leader will have access to ongoing transaction information. The two-phase commit coordinator can resume communication with the new leader to continue the transaction process. If a transaction resolution is required, the new leader can immediately provide the decision, ensuring uninterrupted operations.

During Data Node Failures

In cases of data node failures, the remaining data nodes can still determine the final outcome of a transaction using the two-phase commit protocol. The failure of a single data node does not disrupt the transaction process.

Once the failed node recovers, it can request the transaction's final decision from the controller to catch up on its state. If any of its replica data is outdated, the system’s recovery mechanism will synchronize the data to ensure consistency.

SQL Operations and Functions Supporting Transactions

Distributed transactions are supported for write, update, and delete operations on DFS tables. This section outlines the relevant statements and functions.

DDL (Data Definition Language): create (database/table), alter, drop.

DML (Data Manipulation Language): insert into, update, delete.

Table Join Statements: Table joiners.

Functions for Distributed Database/Table Operations: addColumn, addRangePartitions, addValuePartitions, createdimensiontable, createPartitionedTable, database, dropColumns!, dropDatabase, dropPartition, dropTable, rename!, renameTable, reorderColumns!, replaceColumn!, tableInsert, tableUpsert, truncate, upsert!.

Backup and Restore Functions: backup, backupDB, backupTable, restore, restoreDB, restoreTable.