Inter-node Communication Protocols

DolphinDB supports two different methods of inter-node communication in a cluster: the traditional TCP/IP protocol (Transmission Control Protocol/Internet Protocol) and the more efficient RDMA (Remote Direct Memory Access) technology.

TCP/IP

The TCP/IP protocol is a widely-used network communication model. It works across various hardware and operating systems, supporting heterogeneous network environments.

In DolphinDB, cluster nodes communicate internally by establishing TCP/IP connections using specified IP addresses and port numbers. The PROTOCOL_DDB protocol is used to serialize and deserialize all DolphinDB data forms and types. Communication errors, such as data loss or connection interruptions, trigger error handling: nodes may retransmit data or re-establish connections to maintain communication. Once the exchange is complete, the connection is closed and system resources are released.

During cluster communication:

- The client (request-initiating node) uses a single thread with WSAEventSelect (on Windows) or epoll (on Linux) to handle events. Data transmission and task execution are handled by separate threads, with the number of threads controlled by the remoteExecutors parameter.

- Similarly, the server (request-handling node) also uses a single WSAEventSelect/epoll thread for event handling. The number of threads for data transmission and task execution is controlled by the workerNum configuration parameter.
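The threading model described above (one event-handling thread plus a pool of worker threads) can be sketched in a few lines of Python. This is only an illustration of the general pattern, not DolphinDB's implementation: the functions `handle` and `serve_ready` are hypothetical, the `worker_num` argument merely mirrors the role of workerNum, and the "task" is a stand-in that upper-cases the request.

```python
import selectors
import socket
from concurrent.futures import ThreadPoolExecutor


def handle(conn: socket.socket) -> bytes:
    """Worker-side task: read one request, 'execute' it, send the reply."""
    request = conn.recv(1024)
    reply = request.upper()  # stand-in for real task execution
    conn.sendall(reply)
    return reply


def serve_ready(conns, worker_num=2, timeout=1.0):
    """Single event loop that hands readable sockets to worker threads."""
    selector = selectors.DefaultSelector()  # backed by epoll on Linux
    for conn in conns:
        selector.register(conn, selectors.EVENT_READ)
    pending = len(conns)
    futures = []
    with ThreadPoolExecutor(max_workers=worker_num) as pool:
        while pending:
            events = selector.select(timeout)
            if not events:
                break  # nothing became readable within the timeout
            for key, _mask in events:
                # The event thread only detects readiness; a worker does the I/O.
                selector.unregister(key.fileobj)
                futures.append(pool.submit(handle, key.fileobj))
                pending -= 1
    selector.close()
    return [f.result() for f in futures]


# Demo with an in-process socket pair: one end plays the client.
client_end, server_end = socket.socketpair()
client_end.sendall(b"ping")
print(serve_ready([server_end]))
print(client_end.recv(1024))
client_end.close()
server_end.close()
```

Keeping readiness detection on one thread and moving the actual reads, writes, and task execution to a pool is what lets the number of worker threads be tuned independently of the number of connections.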

DolphinDB's TCP/IP implementation wraps socket read and write operations behind an abstract I/O interface. This abstraction enables efficient buffering strategies, which reduce the number of system calls and improve overall transmission performance.

RDMA

RDMA allows one computer to directly access the memory of another computer without involving the central processing unit (CPU). This technology enables data transfers with high throughput, low latency, and low CPU utilization through two key mechanisms: zero-copy (direct data transfer between network buffers, minimizing data copying between network layers) and kernel bypass (data is transferred directly from user space without kernel involvement).

Before DolphinDB version 3.00.1, RDMA communication had to go through the IPoIB (IP over InfiniBand) Upper Layer Protocol provided by OFED. IPoIB enables IP traffic to run on InfiniBand networks and allows standard Socket programming without changes. This is the simplest approach, but it may not fully exploit RDMA's performance advantages.

Starting from version 3.00.1, DolphinDB's RPC framework supports RDMA natively through the low-level RDMA Verbs API. By using RDMA capabilities directly, this change allows users to fully exploit RDMA's performance benefits. It is particularly beneficial for scenarios requiring high-speed, low-latency communication, such as querying distributed in-memory tables and processing remote procedure calls.

As with TCP/IP, RDMA communication uses the PROTOCOL_DDB protocol for data serialization and deserialization.

Note:

- DolphinDB RDMA currently only supports RPC calls within clusters on Linux. Stream processing is not supported.

- DolphinDB clients (including API, GUI, Web, etc.) do not currently support enabling RDMA. Their communication with cluster nodes uses the TCP/IP protocol.

Inner Workings

During intra-cluster transmission using RDMA:

- The client uses only epoll threads for event handling and data transmission, minimizing inter-thread communication cost. The number of these threads is controlled by the configuration parameter remoteExecutors.

- On the server side, the processing remains the same as in the TCP/IP scenario.

In terms of buffer management, DolphinDB implements an optimized approach. During the connection establishment phase, both communicating parties pre-register dedicated memory areas for RDMA transmissions, which remain fixed in size throughout the transmission. During communication, DolphinDB encapsulates the complex underlying RDMA operations, presenting them as non-blocking Socket semantics to the outside world. This allows for direct reading and writing to the RDMA registered memory, bypassing I/O streams. This method eliminates unnecessary data copying, improving transmission efficiency.

Protocol Negotiation

Regardless of whether TCP/IP or RDMA is used for data transmission, DolphinDB always establishes a TCP connection using IP addresses and ports during cluster communication:

- If the client enables RDMA, the current transmission framework first negotiates RDMA protocol usage and exchanges out-of-band data via the TCP connection. If the server hasn't enabled or doesn't support RDMA, the connection will fail.

- If the client hasn't enabled or doesn't support RDMA, but the server has RDMA enabled, the server will automatically fall back and reuse the established connection for subsequent communication.

| | Server (RDMA Enabled) | Server (RDMA Disabled) |
|---|---|---|
| Client (RDMA Enabled) | Can communicate using RDMA | Cannot communicate |
| Client (RDMA Disabled) | Communicate using TCP/IP | Communicate using TCP/IP |
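The negotiation rules above reduce to a small decision function. The following Python sketch models only the outcomes listed in this section; the names `Transport` and `negotiate` are hypothetical and do not correspond to any DolphinDB API.

```python
from enum import Enum


class Transport(Enum):
    RDMA = "RDMA"
    TCP_IP = "TCP/IP"
    FAILED = "cannot communicate"


def negotiate(client_rdma: bool, server_rdma: bool) -> Transport:
    """Outcome of protocol negotiation over the initial TCP connection."""
    if client_rdma:
        # An RDMA-enabled client requires RDMA on the server; there is no fallback.
        return Transport.RDMA if server_rdma else Transport.FAILED
    # A client without RDMA always ends up on TCP/IP; an RDMA-enabled
    # server falls back and reuses the established TCP connection.
    return Transport.TCP_IP


# Print all four combinations of client and server settings.
for client in (True, False):
    for server in (True, False):
        print(f"client={client}, server={server}: {negotiate(client, server).value}")
```

The asymmetry is the point to remember: the fallback to TCP/IP is driven by the server, so enabling RDMA on a client while any server it talks to has it disabled breaks the connection.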

Enabling RDMA in DolphinDB

The prerequisites for enabling RDMA:

- RDMA requires special network interface cards (NICs) and other hardware support. Check that the RDMA NICs are compatible with the existing hardware, and make sure that the operating system and drivers are installed correctly.

- The libibverbs library must be installed on all machines in the cluster.

- The enableRDMA configuration parameter must be enabled on all cluster nodes (controller, data nodes, compute nodes, and agent).

Once enabled, you can continue using the IP and port values previously set for TCP/IP connections. Existing scripts can be fully reused for RDMA transmission.
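Because a single node without enableRDMA can make connections fail, it may help to check every node's configuration before restarting the cluster. The Python sketch below is illustrative only. It assumes plain key=value configuration text, assumes that `true` or `1` enables the parameter, and treats a missing entry as disabled. The file names in the sample data are placeholders, and the helper functions are hypothetical rather than part of DolphinDB.

```python
def rdma_enabled(config_text: str) -> bool:
    """Return True if key=value config text sets enableRDMA to true or 1."""
    for raw_line in config_text.splitlines():
        if "=" not in raw_line:
            continue
        key, value = raw_line.split("=", 1)
        if key.strip() == "enableRDMA":
            # Assumption: "true" and "1" both enable the parameter.
            return value.strip().lower() in ("true", "1")
    return False  # assumption: an absent entry means RDMA is not enabled


def missing_rdma(configs: dict[str, str]) -> list[str]:
    """Names of the configuration texts that do not enable RDMA."""
    return sorted(name for name, text in configs.items() if not rdma_enabled(text))


# Hypothetical configuration contents keyed by placeholder file names.
sample = {
    "controller.cfg": "workerNum=4\nenableRDMA=true",
    "cluster.cfg": "workerNum=8\nenableRDMA=1",
    "agent.cfg": "workerNum=4",
}
print(missing_rdma(sample))
```

In practice the dictionary would be filled by reading the real configuration files from each machine; the check itself stays the same.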

Note: The following are not supported: multi-NIC selection, NIC port selection, setting the polling frequency, and configuring the size of pre-registered RDMA buffers.

TCP/IP vs. RDMA

TCP/IP and RDMA serve different purposes and are optimized for different types of communication. TCP/IP, the backbone of internet communication, offers versatility, robustness, and wide compatibility. RDMA, designed for high-performance computing, excels in low-latency, high-throughput scenarios, particularly for processing large datasets.

While TCP/IP is ideal for general network communication, RDMA is suited to specialized scenarios that demand intensive data processing and high network performance. RDMA, however, has specific hardware requirements, typically RDMA NICs. In certain contexts, TCP/IP and RDMA can be enabled at the same time to leverage the strengths of both technologies.

The following table compares TCP/IP and RDMA across several aspects:

| Aspect | TCP/IP | RDMA |
|---|---|---|
| Communication Mechanism | Connection-oriented | Transport modes defined by the InfiniBand specification: RC (Reliable Connected)/RD (Reliable Datagram)/UC (Unreliable Connected)/UD (Unreliable Datagram) |
| Data Transfer Method | Data copying, full-duplex communication | Zero-copy, direct memory access |
| Memory Access | Through operating system kernel | Bypasses operating system kernel |
| Programming Model | Socket API | Verbs API |
| Advantages | Widely compatible, flexible and robust | High throughput, low latency data transmission, with large-scale parallel processing support |

RDMA Performance Testing

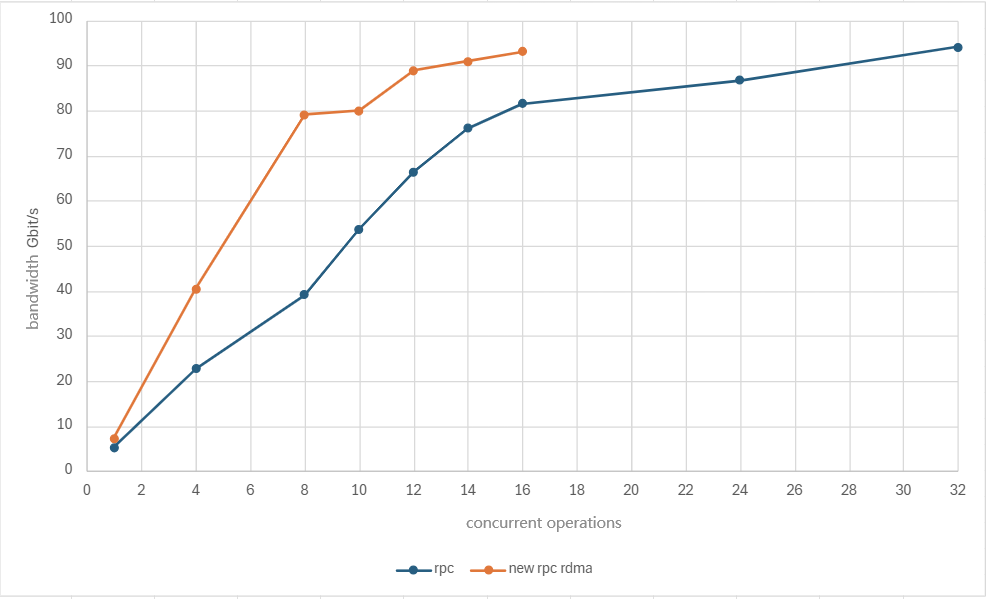

In scenarios with high network communication volume, enabling RDMA can lead to significant performance improvements.

The test case below illustrates RDMA's memory writing performance.

Test Scenario: Create an in-memory table on one node. Then initiate concurrent queries (sent through submitJob) from another node using the rpc function. The goal is to determine how many concurrent operations are needed to achieve 100 Gbit/s bandwidth (the fewer concurrent operations required, the better the performance).

Test Conditions:

- The in-memory table consists of 10 INT columns, 10 LONG columns, 10 FLOAT columns, and 10 DOUBLE columns, with a total size of approximately 3.8 GB.
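To put the 100 Gbit/s target in perspective, the short Python calculation below estimates the size of one row and how long one full transfer of the table would take at that rate. It is a back-of-the-envelope sketch: it assumes 1 GB = 10^9 bytes, uses the fixed widths of the four column types (4 bytes for INT and FLOAT, 8 bytes for LONG and DOUBLE), and ignores serialization and protocol overhead. The function name is hypothetical.

```python
# Fixed widths in bytes of the column types used in the test table.
TYPE_BYTES = {"INT": 4, "LONG": 8, "FLOAT": 4, "DOUBLE": 8}
COLUMNS_PER_TYPE = 10

TABLE_BYTES = 3.8e9     # approximate table size, assuming 1 GB = 10**9 bytes
TARGET_GBIT_S = 100.0   # bandwidth target of the test


def transfer_seconds(size_bytes: float, gbit_per_s: float) -> float:
    """Time to move size_bytes at the given rate, ignoring all overhead."""
    return size_bytes * 8 / (gbit_per_s * 1e9)


row_bytes = COLUMNS_PER_TYPE * sum(TYPE_BYTES.values())  # 240 bytes per row
approx_rows = TABLE_BYTES / row_bytes
seconds = transfer_seconds(TABLE_BYTES, TARGET_GBIT_S)   # about 0.304 s

print(f"bytes per row:      {row_bytes}")
print(f"approximate rows:   {approx_rows:,.0f}")
print(f"one full transfer:  {seconds:.3f} s at {TARGET_GBIT_S:.0f} Gbit/s")
print(f"transfers per sec:  {1 / seconds:.2f} to sustain the target")
```

Under these assumptions, sustaining the target means delivering a little more than three full copies of the table every second, which is why a single query stream may not be enough and concurrency is used as the measure.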

Test Subjects:

- DolphinDB RPC using IPoIB

- DolphinDB RPC using native RDMA

Test Results (Measured in Gbit/s):

Conclusion:

As shown in the above plot, the NIC utilization with RDMA is twice that of IPoIB.

Related Functions and Configurations

Functions: getConnections

Configuration parameters: Inter-node Communication