Online Recovery

Online recovery repairs inconsistent or corrupted data replicas in a distributed file system (DFS). Unlike offline recovery, it runs while data is still being written, which minimizes disruption to ongoing operations. DolphinDB's online recovery is incremental: it copies only the differences between replicas, which shortens the recovery process.

Implementation

DolphinDB's online recovery combines a multi-replica mechanism, transaction management, and MVCC (Multi-Version Concurrency Control) to ensure data integrity.

In a distributed database, a table’s data in each partition (also known as a “chunk”) is replicated across multiple data nodes. These identical copies are called replicas. Users define the number of replicas per chunk. Having at least two replicas allows a corrupted or inconsistent replica to be recovered from an intact one. On startup, data nodes report their local replica information to the controller, which compares it with its own chunk metadata. If inconsistencies are detected, the controller initiates online recovery, in which data is copied from a source node whose replica information matches the controller's metadata.
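The comparison step can be illustrated with a minimal Python sketch. It is not DolphinDB's implementation: the `ReplicaReport` type, the `plan_recovery` function, and the use of a single integer `version` as the "replica information" are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicaReport:
    node: str      # data node that sent the report
    chunk_id: str  # chunk the replica belongs to
    version: int   # hypothetical stand-in for the reported replica information


def plan_recovery(controller_meta, reports):
    """Return (chunk_id, source_node, target_node) tuples for every
    replica whose reported version differs from the controller's metadata."""
    by_chunk = {}
    for report in reports:
        by_chunk.setdefault(report.chunk_id, []).append(report)

    tasks = []
    for chunk_id, replicas in by_chunk.items():
        expected = controller_meta[chunk_id]
        sources = [r.node for r in replicas if r.version == expected]
        targets = [r.node for r in replicas if r.version != expected]
        if not sources:
            continue  # no replica matches the metadata, so nothing to copy from
        for target in targets:
            tasks.append((chunk_id, sources[0], target))
    return tasks


controller_meta = {"chunk-a": 7, "chunk-b": 3}
reports = [
    ReplicaReport("node1", "chunk-a", 5),  # behind the controller's metadata
    ReplicaReport("node2", "chunk-a", 7),
    ReplicaReport("node2", "chunk-b", 3),
    ReplicaReport("node3", "chunk-b", 3),
]
tasks = plan_recovery(controller_meta, reports)
print(tasks)
```

In this example only `chunk-a` on `node1` disagrees with the controller, so a single task is planned with `node2` as the source.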

Each database transaction is assigned a unique, incrementing commit ID (CID). The system saves multiple transaction snapshots for each chunk, preserving database states at different points in time. This enables multi-versioning through an MVCC mechanism. DolphinDB maintains these versions in a CID-based chain. Chunk inconsistencies typically result from missing transaction data. The online recovery process resolves them by copying differential data along the version chain, which brings replicas up to the latest state.
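As a rough illustration of differential copying along a version chain, the sketch below models each chain as a list of `(cid, rows)` pairs ordered by CID. The list representation and the helper names `diff_since` and `apply_diff` are assumptions for this example and do not reflect DolphinDB's internal structures.

```python
def diff_since(source_chain, target_max_cid):
    """Return the source transactions whose CID is greater than the
    target's maximum CID, in chain order."""
    return [(cid, rows) for cid, rows in source_chain if cid > target_max_cid]


def apply_diff(target_chain, diff):
    """Append the missing transactions to the target's chain."""
    target_chain.extend(diff)


# The source replica holds three committed transactions; the target stopped at CID 101.
source_chain = [(101, ["a"]), (102, ["b", "c"]), (105, ["d"])]
target_chain = [(101, ["a"])]

# An empty target chain is treated as CID 0, so everything gets copied.
target_max = max((cid for cid, _ in target_chain), default=0)
missing = diff_since(source_chain, target_max)
apply_diff(target_chain, missing)

print(missing)
print(target_chain == source_chain)
```

Only the transactions with CIDs 102 and 105 are transferred, which is why the approach is cheaper than copying the whole chunk.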

The online recovery process consists of the following two stages.

The Asynchronous Stage

The asynchronous recovery stage begins after the controller initiates a recovery request. The source node (which holds an intact replica) identifies the maximum CID of the target node (which holds the inconsistent replica) and copies the differential data. During this stage, the affected chunk continues handling new read and write operations.

To prevent an endless recovery loop caused by incoming transactions, the system switches to synchronous recovery under two conditions: when the remaining data to recover falls below a threshold (set by dfsSyncRecoveryChunkRowSize), or when the differential data has been copied 5 times.
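The switching rule can be written as a small predicate. This is a sketch under stated assumptions: the function name `should_switch_to_sync` is hypothetical, and the 100,000-row default is the figure this document gives for dfsSyncRecoveryChunkRowSize in the process overview.

```python
SYNC_ROW_THRESHOLD = 100_000  # figure the document gives for dfsSyncRecoveryChunkRowSize
MAX_ASYNC_ROUNDS = 5          # copy rounds allowed before forcing the switch


def should_switch_to_sync(remaining_rows, rounds_done,
                          threshold=SYNC_ROW_THRESHOLD,
                          max_rounds=MAX_ASYNC_ROUNDS):
    """Return True once either condition for synchronous recovery holds."""
    return remaining_rows < threshold or rounds_done >= max_rounds


print(should_switch_to_sync(50_000, 1))   # small gap after the first round
print(should_switch_to_sync(250_000, 2))  # large gap, rounds not exhausted
print(should_switch_to_sync(250_000, 5))  # large gap, but five rounds already done
```

The second condition is what bounds the loop: under sustained writes the gap may never fall below the threshold, so the round cap guarantees the switch eventually happens.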

The Synchronous Stage

During the synchronous recovery stage, writes to the affected chunk are temporarily blocked. The source node copies the remaining data to the target node. As only a small amount of data remains to be copied, the process usually finishes in less than a second, minimizing disruption to write operations on the chunk.

Process Overview

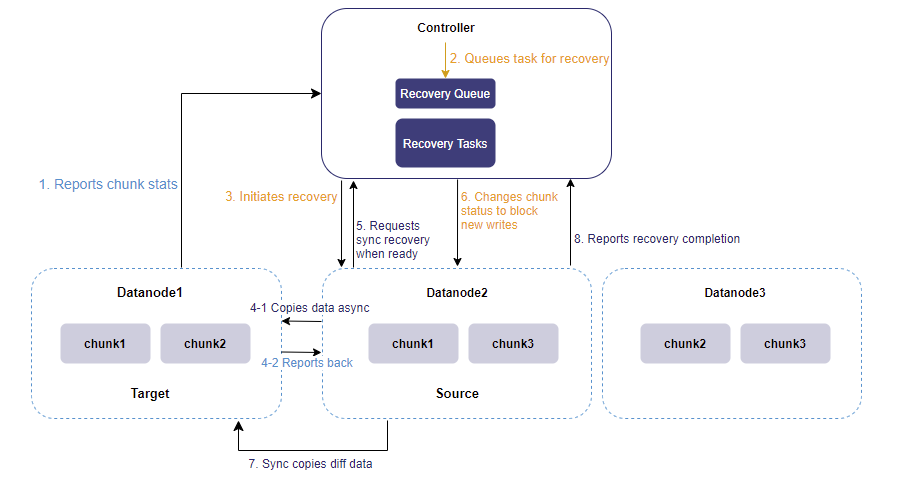

Full process of the online recovery:

- Upon reboot, a data node reports its chunk statistics to the cluster controller.

- The controller initiates recovery if needed, adding a recovery task to the recovery queue.

- A dedicated recovery thread collects the task from the queue, identifies source node and target node, and sends a recovery request to the source node.

- The source node copies differential data to the target node without blocking other operations. The target node reports back success or failure.

- The previous step repeats up to 5 times or until the replica difference is less than 100,000 rows (set by dfsSyncRecoveryChunkRowSize). Then, the source node requests the controller to switch to synchronous recovery.

- The controller marks the affected chunk as RECOVERING, blocking new writes to the chunk.

- The source node continues differential data copying until the replicas are identical, then reports completion to the controller.

- The controller changes chunk status to COMPLETE to allow new writes.
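The steps above can be traced with a simplified simulation that tracks row counts only. It is an illustrative model, not DolphinDB code: `simulate_recovery` and the `writes_per_round` input (rows written to the source while each asynchronous copy runs) are assumptions, and failure handling is omitted.

```python
SYNC_ROW_THRESHOLD = 100_000  # row threshold quoted in the steps above
MAX_ASYNC_ROUNDS = 5


def simulate_recovery(source_rows, target_rows, writes_per_round):
    """Return the list of events produced while recovering one chunk."""
    events = []
    rounds = 0
    # Asynchronous stage: copy the current gap while writes keep arriving.
    while rounds < MAX_ASYNC_ROUNDS:
        gap = source_rows - target_rows
        target_rows += gap
        events.append(("async", gap))
        # Rows written to the source while this copy was in flight.
        if rounds < len(writes_per_round):
            source_rows += writes_per_round[rounds]
        rounds += 1
        if source_rows - target_rows < SYNC_ROW_THRESHOLD:
            break
    # Synchronous stage: writes are blocked, so the gap can no longer grow.
    events.append(("status", "RECOVERING"))
    gap = source_rows - target_rows
    target_rows += gap
    events.append(("sync", gap))
    events.append(("status", "COMPLETE"))
    return events


# Write load tapers off, so the gap drops below the threshold after three rounds.
tapering = simulate_recovery(1_000_000, 400_000, [300_000, 150_000, 40_000])
print(tapering)

# Sustained write load: the five-round cap forces the switch instead.
sustained = simulate_recovery(500_000, 0, [200_000] * 10)
print(sum(1 for kind, _ in sustained if kind == "async"))
```

In the first run the synchronous stage only has 40,000 rows left to copy. In the second, the gap stays at 200,000 rows, and the switch happens because the round limit is reached.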

The image above shows a cluster with one controller and three data nodes, with 2 replicas per chunk, and illustrates Datanode1's recovery process after an error.

Benefits

Incremental data copying significantly reduces the recovery time.

The two-phase approach—non-blocking asynchronous replication followed by brief synchronous replication—minimizes business disruption.

Performance Tuning and Monitoring

DolphinDB offers tools for performance tuning, concurrency settings, and real-time monitoring to ensure efficient online recovery.

The configuration parameters and functions are as follows:

- dfsRecoveryConcurrency: The number of concurrent recovery tasks in a node recovery. The default value is twice the number of all nodes except the agent.

- recoveryWorkers: The number of workers that can be used to recover chunks synchronously in node recovery. The default value is 1. Alternatively, call resetRecoveryWorkerNum to configure the value dynamically.

- getRecoveryTaskStatus: Get the status of recovery tasks.

- suspendRecovery: Suspend online node recovery processes.

- resumeRecovery: Resume suspended recovery processes in "Waiting" status.

- cancelRecoveryTask: Cancel the replica recovery jobs that have been submitted but have not begun execution.
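To make the suspend, resume, and cancel semantics above concrete, the following Python sketch models a recovery task queue. It is a toy model, not DolphinDB's API: the `RecoveryQueue` class and its methods are hypothetical, and apart from "Waiting", which appears in the list above, the status names are assumptions.

```python
class RecoveryQueue:
    """Toy model of a recovery task queue with a fixed number of workers."""

    def __init__(self, workers=1):
        self.workers = workers
        self.suspended = False
        self.status = {}  # task_id -> status string, in submission order

    def submit(self, task_id):
        self.status[task_id] = "Waiting"

    def dispatch(self):
        """Start waiting tasks while worker slots are free; return started ids."""
        if self.suspended:
            return []
        running = sum(1 for s in self.status.values() if s == "In-Progress")
        started = []
        for task_id, state in self.status.items():
            if running >= self.workers:
                break
            if state == "Waiting":
                self.status[task_id] = "In-Progress"
                running += 1
                started.append(task_id)
        return started

    def finish(self, task_id):
        self.status[task_id] = "Finished"

    def cancel(self, task_id):
        """Cancel a task only if it has not begun execution."""
        if self.status.get(task_id) == "Waiting":
            self.status[task_id] = "Cancelled"
            return True
        return False


queue = RecoveryQueue(workers=1)
for task_id in ("t1", "t2", "t3"):
    queue.submit(task_id)

first = queue.dispatch()              # one worker, so only t1 starts
cancel_running = queue.cancel("t1")   # already executing: not cancellable
cancel_waiting = queue.cancel("t3")   # still waiting: cancellable
queue.suspended = True                # suspend: waiting tasks stay queued
queue.finish("t1")
while_suspended = queue.dispatch()
queue.suspended = False               # resume: waiting tasks are picked up again
after_resume = queue.dispatch()
print(first, cancel_running, cancel_waiting, while_suspended, after_resume)
```

The worker count plays the role of a concurrency limit here. Raising it lets more tasks run at once, at the cost of more load on the nodes involved.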