Standalone Mode

Standalone mode is intended for trying out and getting started with DolphinDB. To start it, run the executable file in the server directory of the installation package.

Start DolphinDB Server

- Linux

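Navigate to the server directory and run the following command in a shell to start a local node. The -localSite value has the format host:port:alias, so this command starts a node on localhost at port 8900 with the alias local8900: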

./dolphindb -localSite localhost:8900:local8900

- Windows

Method 1

Open dolphindb.exe in the server directory.

Method 2

Navigate to the server directory and enter the following command in Command Prompt:

dolphindb.exe -localSite localhost:8900:local8900

Start DolphinDB Terminal

The DolphinDB terminal is an interactive command-line tool that connects to a remote DolphinDB server and executes commands on it.

To start the terminal:

- Linux

rlwrap -r ./dolphindb -remoteHost 192.168.1.135 -remotePort 8848

- Windows

dolphindb.exe -remoteHost 192.168.1.135 -remotePort 8848

To quit the terminal:

quit

The following parameters can only be specified on the command line:

| Parameter | Description |
|---|---|
| remoteHost | IP address of the remote DolphinDB server. |
| remotePort | Port number of the remote DolphinDB server. |
| stdoutLog | Where to output the system log. It can be: |
| uid | User name for logging in to the remote DolphinDB server. |
| pwd | Password for logging in to the remote DolphinDB server. |
| run | The local DolphinDB script file sent to the remote server for execution. It is executed after the startup script (startup.dos). By default, it is located in <HomeDir>. Note: After execution completes, the terminal exits automatically. If the script executes successfully, the system returns 0; otherwise, it returns a non-zero value. |
| maxLogSize=1024 | The system archives the server log once it reaches the specified size (in MB). The default value is 1024 and the minimum is 100. A prefix in the format <date><seq> is added to the original log name to form the archived log name, e.g. 20181109000. seq has 3 digits and starts with 000. |
| console=true | A Boolean value indicating whether to start a DolphinDB console. The default value is true. |
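Because the terminal exits after a -run script finishes and returns 0 only on success, its exit status can drive shell automation such as cron jobs or CI steps. The following is a minimal sketch, not an official tool. The function name run_dos_script is hypothetical, and the user name, password, and script path in the commented usage line are placeholders.

```shell
# run_dos_script: hypothetical wrapper that runs a command (for example, a
# DolphinDB terminal invocation with -run) and reports the result based on its
# exit status. Per the -run description, 0 means success; anything else is failure.
run_dos_script() {
  rc=0
  "$@" || rc=$?   # capture the exit status without tripping `set -e`
  if [ "$rc" -eq 0 ]; then
    echo "script succeeded"
  else
    echo "script failed (exit code $rc)" >&2
  fi
  return "$rc"
}

# Example (placeholders, not executed here):
# run_dos_script ./dolphindb -remoteHost 192.168.1.135 -remotePort 8848 \
#   -uid <user> -pwd <password> -run /path/to/job.dos
```

Returning the original exit code, rather than only printing a message, lets callers chain the wrapper with `&&` or `||` as they would the terminal itself.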

Other parameters that can only be specified on the command line (including home, logFile, config, clusterConfig, and nodesFile) are explicitly noted as such in their parameter descriptions.
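The archive naming rule in the maxLogSize description can be illustrated with a short shell sketch. The function archived_log_name is hypothetical. The log file name dolphindb.log in the example is an assumption, and so is the premise that seq counts up for each archive created on the same date. The sketch only formats a name; it does not archive anything.

```shell
# archived_log_name: sketch of the <date><seq> + original-name rule.
# $1 = date as YYYYMMDD, $2 = sequence number as a plain integer starting at 0,
# $3 = original log file name. seq is zero-padded to 3 digits.
archived_log_name() {
  printf '%s%03d%s\n' "$1" "$2" "$3"
}

# archived_log_name 20181109 0 dolphindb.log  prints 20181109000dolphindb.log
```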

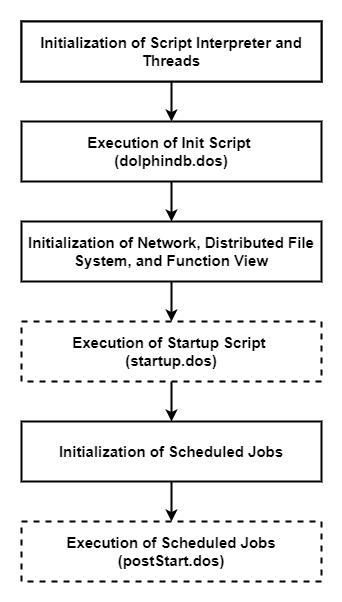

Startup Process

The startup process of the DolphinDB server is shown below:

The following three scripts are involved in the startup process:

| Parameter | Description |
|---|---|
| init=dolphindb.dos | This file is executed when the system starts. The default file is <HomeDir>/dolphindb.dos. It usually contains definitions of system-level functions that are visible to all users and cannot be overwritten. |
| startup=startup.dos | This file is executed after the system starts. The default file is <HomeDir>/startup.dos. It can be used to load plugins, load and share tables, and define and load stream tables. |
| postStart=postStart.dos | This file is executed after scheduled jobs are initialized and can be used to load scheduled jobs. The default file is <HomeDir>/postStart.dos. |

DolphinDB also supports preloading modules and plugins at startup through the configuration parameter preloadModules.

- Plugin preloading: Specify preloadModules as plugins::<plugin name>, such as plugins::mysql. The system loads the plugin from pluginDir (the plugins directory by default).
- Module preloading: Specify preloadModules as the module's path relative to moduleDir (the modules directory by default), using :: as the path separator. For instance, specify system::log::fileLog to preload the module located at system/log/fileLog.dos in moduleDir.

| Parameter | Description |
|---|---|
| preloadModules=plugins::mysql,system::log::fileLog | The modules or plugins that are loaded after the system starts. Use commas to separate multiple modules and plugins. |
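To make the two naming rules concrete, the following shell sketch maps each comma-separated preloadModules entry to the plugin or module file it refers to. It is illustrative only. The function resolve_preload is hypothetical and loads nothing, and it is not how DolphinDB itself parses the parameter. It applies just the rules stated above: entries starting with plugins:: are plugins, and everything else is a module path under moduleDir.

```shell
# resolve_preload: hypothetical sketch that explains a preloadModules value.
# $1 = preloadModules value, $2 = pluginDir (default: plugins),
# $3 = moduleDir (default: modules).
# The function body is a subshell, so the IFS change below stays local.
resolve_preload() (
  plugin_dir=${2:-plugins}
  module_dir=${3:-modules}
  IFS=','                       # split the value on commas
  for entry in $1; do
    case $entry in
      plugins::*)
        # plugins::<name> -> plugin <name>, loaded from pluginDir
        echo "plugin ${entry#plugins::} from $plugin_dir" ;;
      *)
        # a::b::c -> <moduleDir>/a/b/c.dos
        echo "module $module_dir/$(printf '%s' "$entry" | sed 's#::#/#g').dos" ;;
    esac
  done
)
```

For the example value in the table, `resolve_preload 'plugins::mysql,system::log::fileLog'` prints one line for the mysql plugin (from plugins) and one for modules/system/log/fileLog.dos.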
