Single-Machine Cluster Deployment

This tutorial describes how to deploy the DolphinDB single-server cluster and update the cluster and license file. It serves as a quick start guide for you.

Deploy Single-Server Cluster on Linux OS

In this tutorial, you will learn how to deploy a basic single-server cluster. Use the config file from the installation package to deploy a single-server cluster with 1 controller, 1 agent, 1 data node and 1 compute node.

Step 1: Download

- Official website: DolphinDB

- Or you can download DolphinDB with a shell command:

wget https://www.dolphindb.com/downloads/DolphinDB_Linux64_V${release}.zip -O dolphindb.zip${release} refers to the version of DolphinDB server. For example, you can download Linux64 server 2.00.11.3 with the following command:

wget https://www.dolphindb.com/downloads/DolphinDB_Linux64_V2.00.11.3.zip -O dolphindb.zipTo download the ABI or JIT version of DolphinDB server, add "ABI" or "JIT" after the version number (linked with an underscore). For example, you can download Linux64 ABI server 2.00.11.3 with the following command:

wget https://www.dolphindb.com/downloads/DolphinDB_Linux64_V2.00.11.3_ABI.zip -O dolphindb.zipDownload Linux64 JIT server 2.00.11.3 with the following command:

wget https://www.dolphindb.com/downloads/DolphinDB_Linux64_V2.00.11.3_JIT.zip -O dolphindb.zip- Then extract the installation package to the specified directory (

/path/to/directory):

unzip dolphindb.zip -d </path/to/directory>Note: The directory name cannot contain any space characters, otherwise the startup of the data node will fail.

Step 2: Update License File

If you have obtained the Enterprise Edition license, use it to replace the following file:

/DolphinDB/server/dolphindb.licOtherwise, continue to use the community version, which allows up to 8 GB RAM use for 20 years.

Step 3: Start DolphinDB Cluster

Navigate to the folder /DolphinDB/server/. The file permissions need to be modified for the first startup. Execute the following shell command:

chmod +x dolphindbThen go to /DolphinDB/server/clusterDemo to start the controller and agent with no specified order.

- Start Controller

sh startController.sh- Start Agent

sh startAgent.shTo check whether the node was started, execute the following shell command:

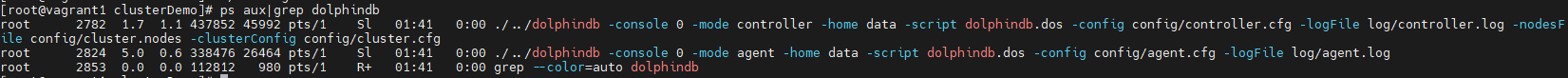

ps aux|grep dolphindbThe following information indicates a successful startup:

- Start Data Nodes and Compute Nodes

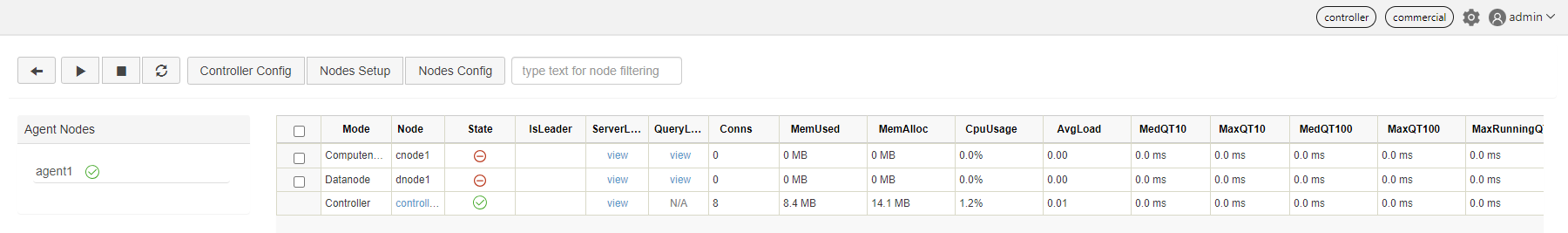

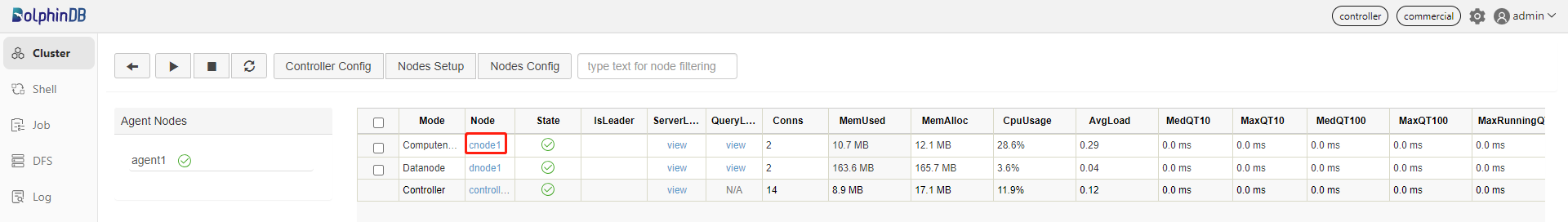

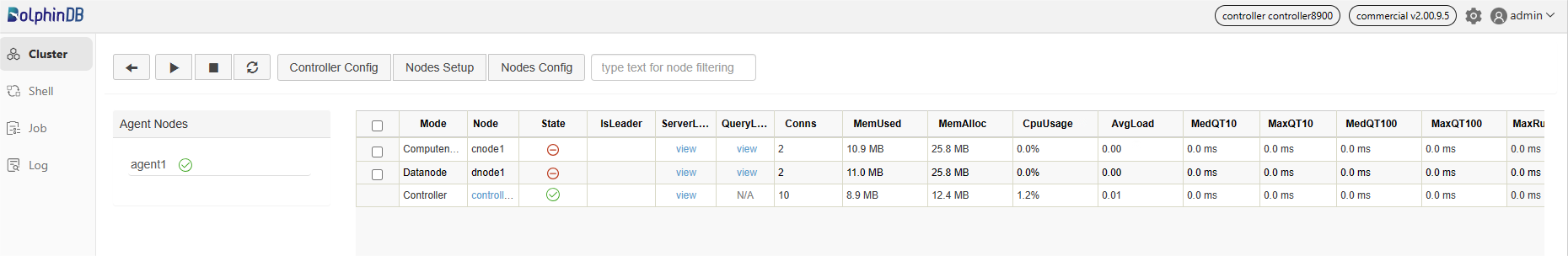

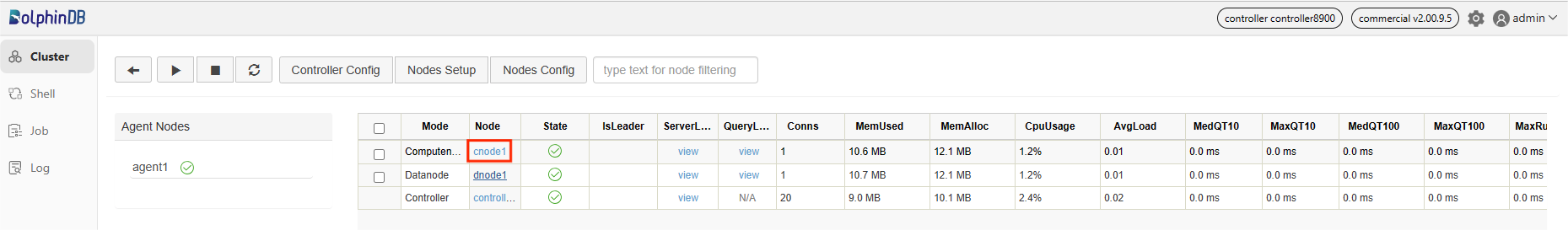

You can start or stop data nodes and compute nodes, and modify cluster configuration parameters on DolphinDB cluster management web interface. Enter the deployment server IP address and controller port number in the browser to navigate to the DolphinDB Web. The server address (ip:port) used in this tutorial is 10.0.0.80:8900. Below is the web interface. Log in with the default administrator account (username: admin, password: 123456). Then select the required data nodes and compute nodes, and click on the execute/stop button.

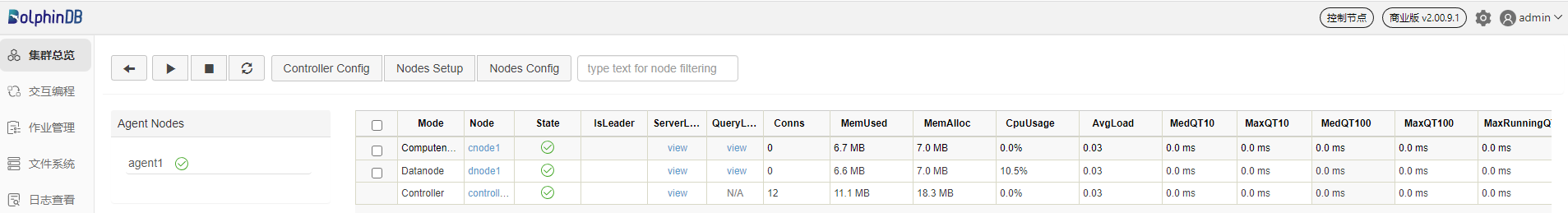

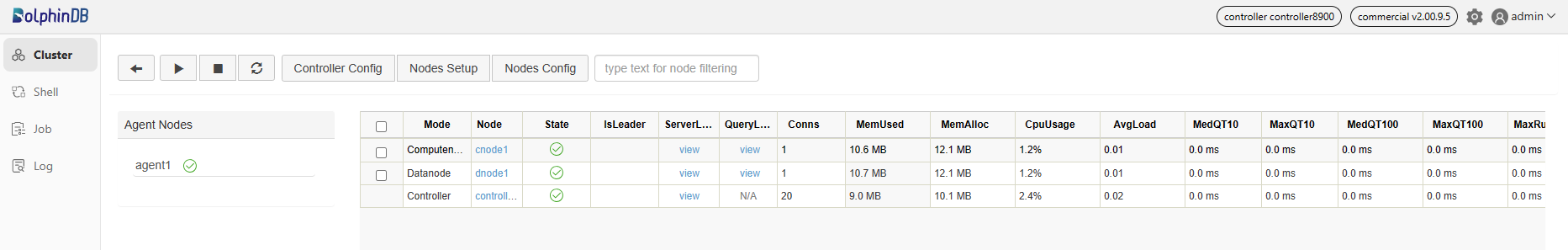

Click on the refresh button to check the status of the nodes. The following green check marks mean all the selected nodes have been turned on:

Note: If the browser and DolphinDB are not deployed on the same server, you should turn off the firewall or open the corresponding port beforehand.

Step 4: Create Databases and Partitioned Tables on Data Nodes

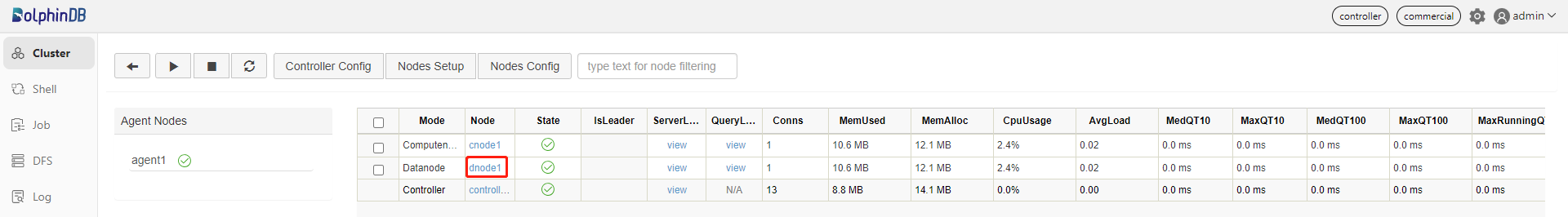

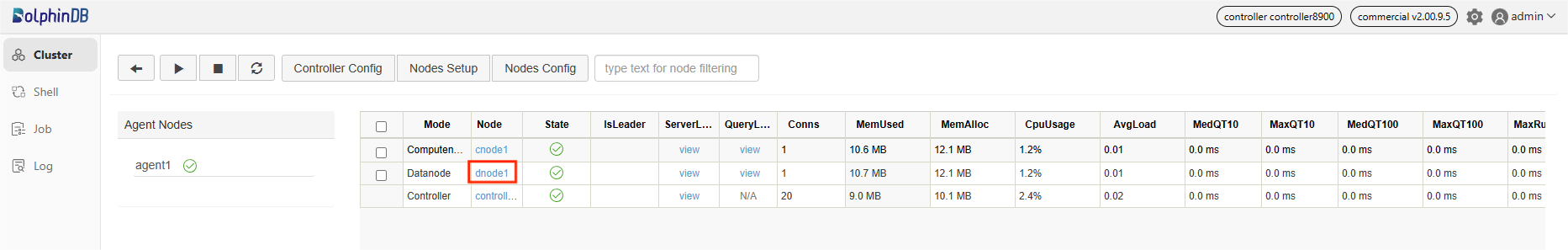

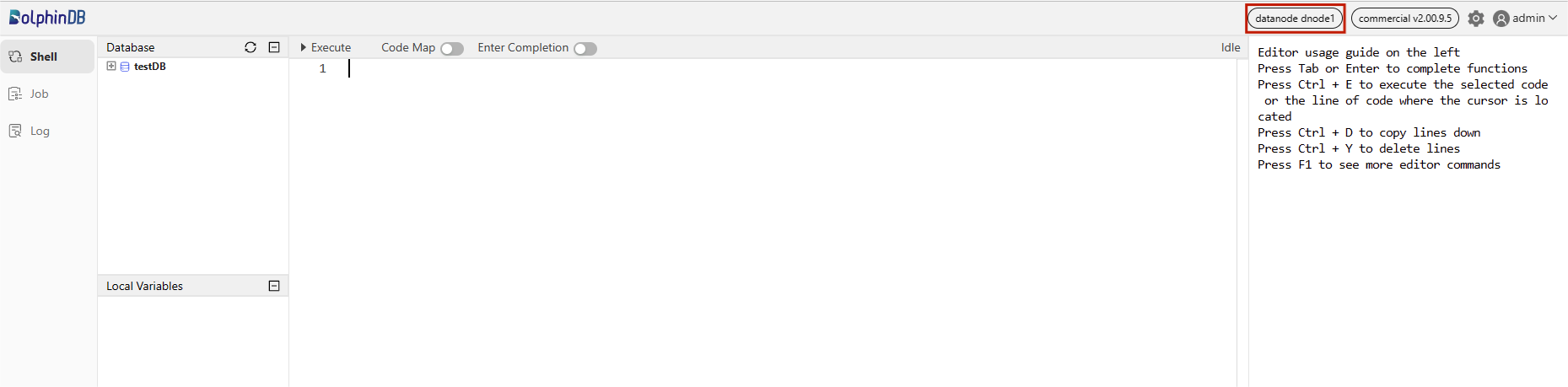

Data nodes can be used for data storage, queries and computation. The following example shows how to create databases and write data on data nodes. First, open the web interface of the Controller, and click on the corresponding Data node to open its Shell interface:

You can also enter IP address and port number of the data nodes in your browser to navigate to the Shell interface.

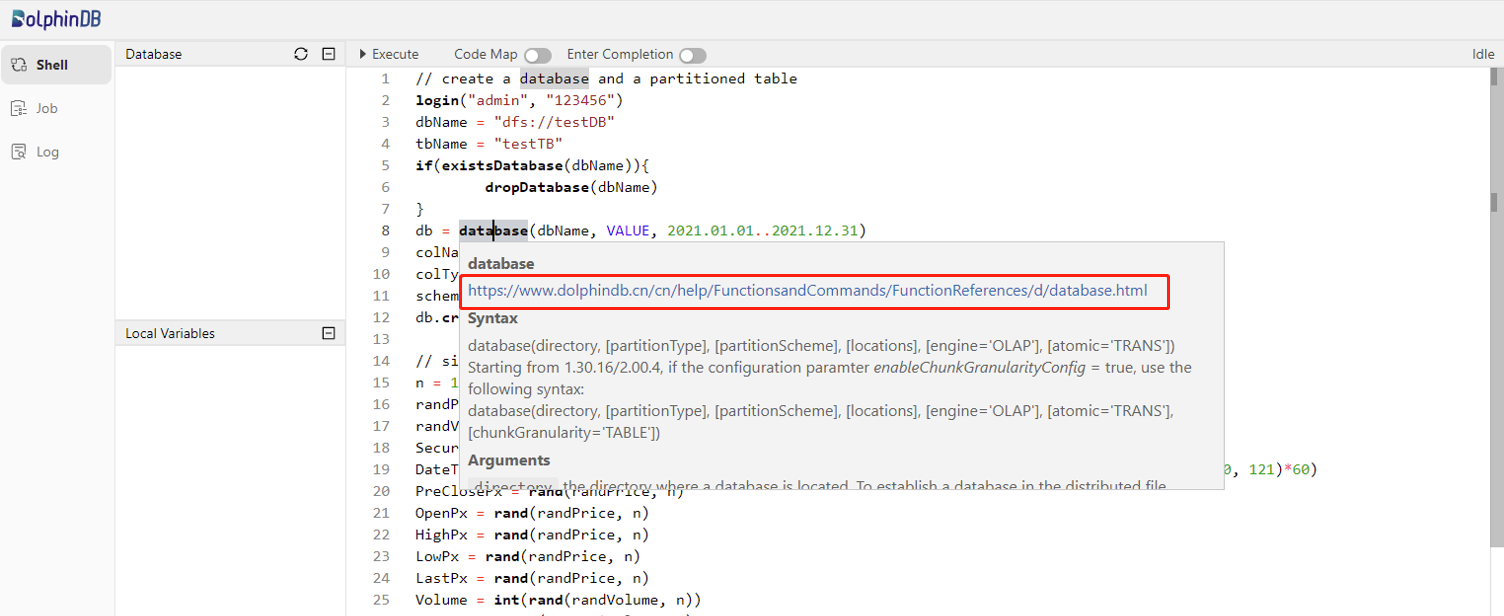

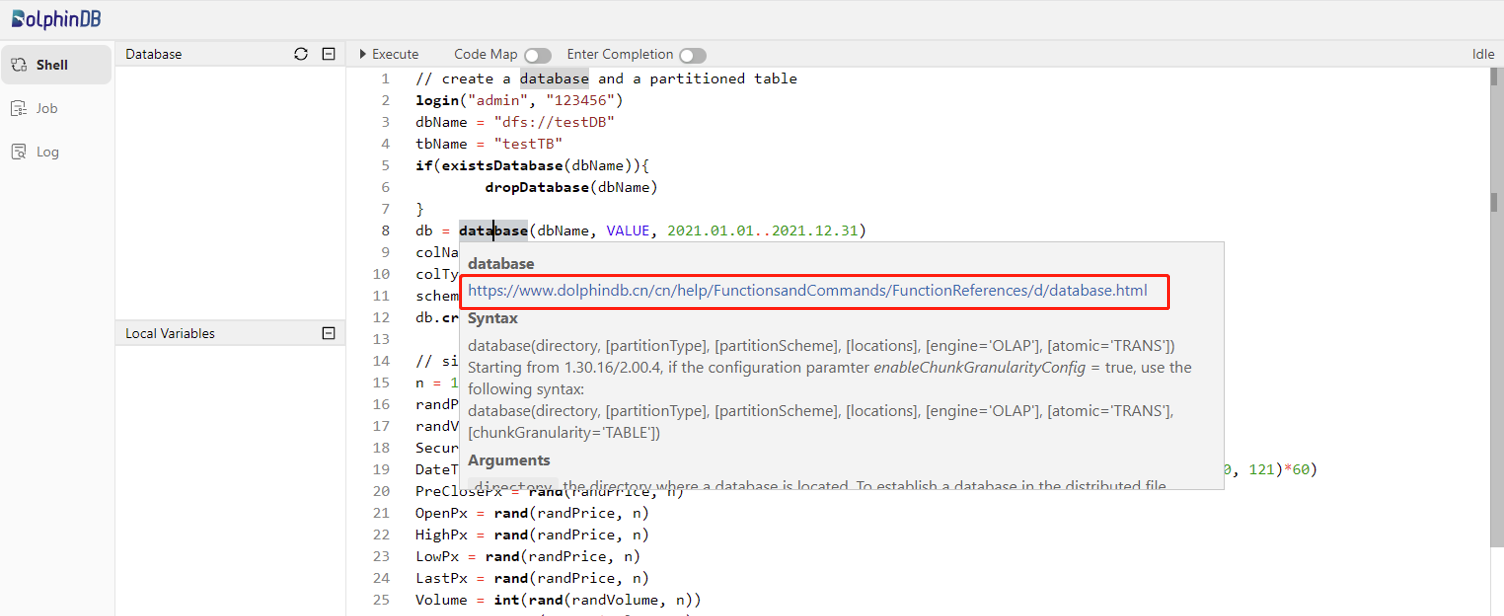

Execute the following script to create a database and a partitioned table:

//create a database and a partitioned table

login("admin", "123456")

dbName = "dfs://testDB"

tbName = "testTB"

if(existsDatabase(dbName)){

dropDatabase(dbName)

}

db = database(dbName, VALUE, 2021.01.01..2021.12.31)

colNames = `SecurityID`DateTime`PreClosePx`OpenPx`HighPx`LowPx`LastPx`Volume`Amount

colTypes = [SYMBOL, DATETIME, DOUBLE, DOUBLE, DOUBLE, DOUBLE, DOUBLE, INT, DOUBLE]

schemaTable = table(1:0, colNames, colTypes)

db.createPartitionedTable(table=schemaTable, tableName=tbName, partitionColumns=`DateTime)

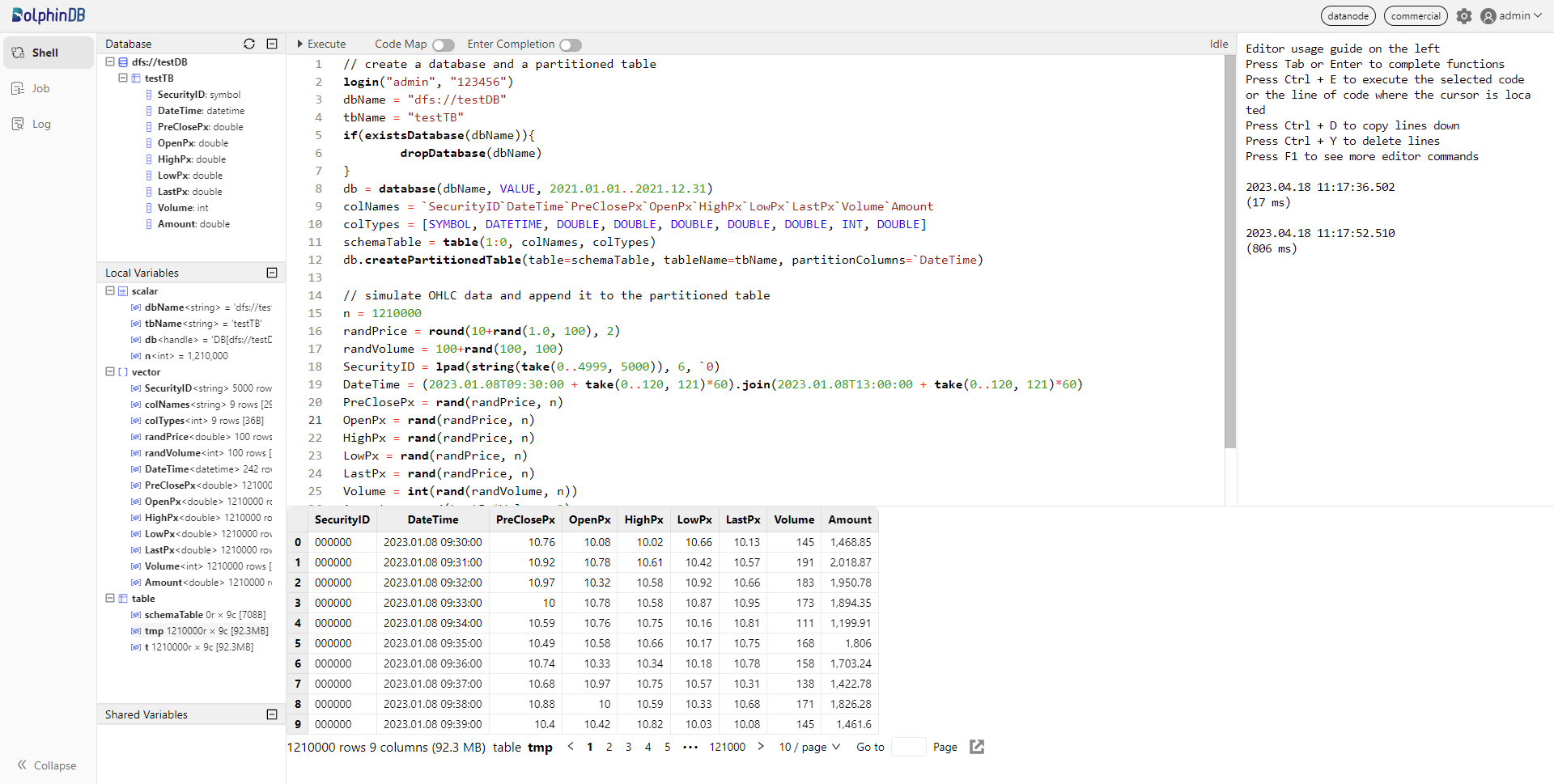

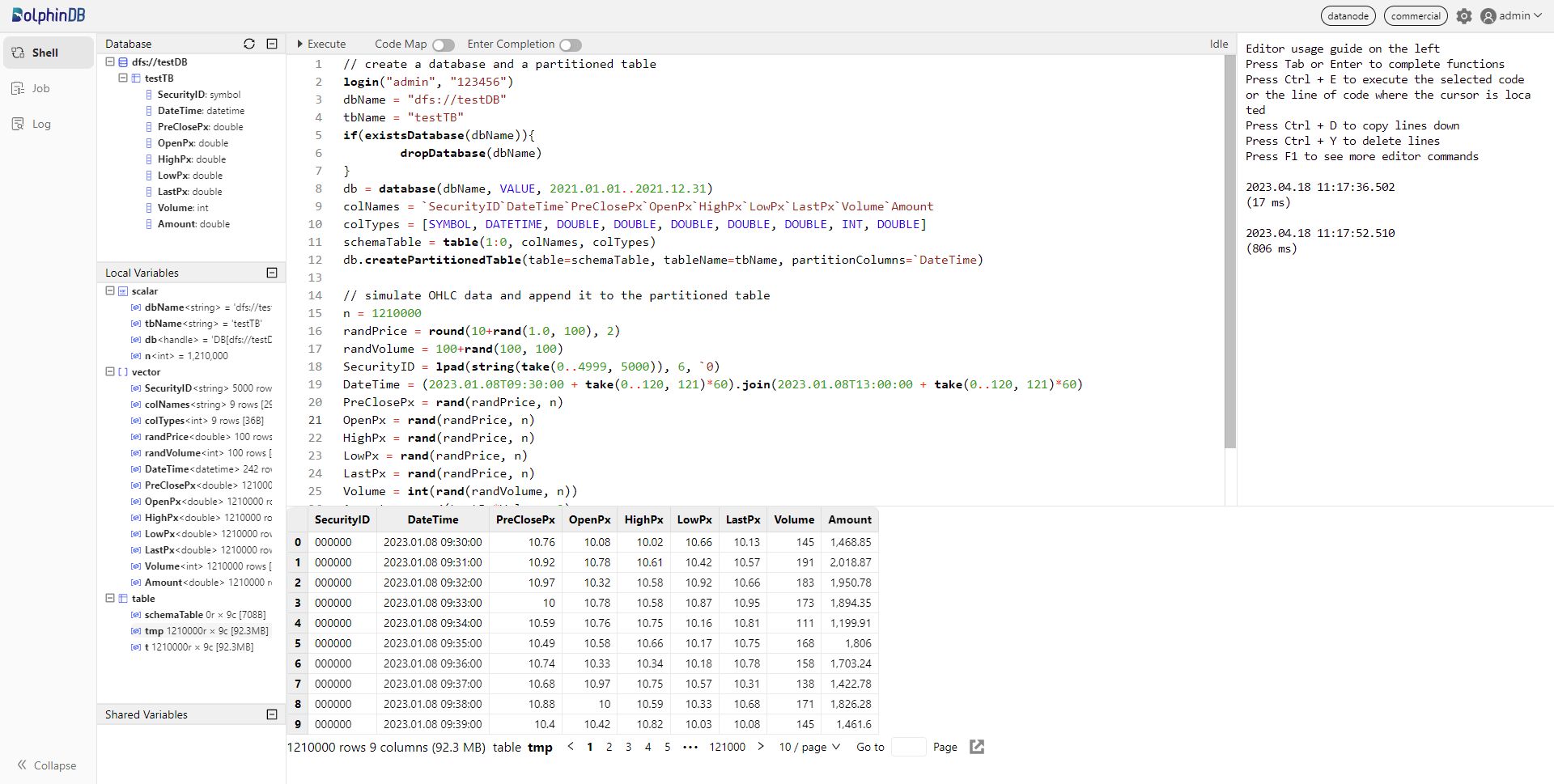

Then, run the following script to generate 1-minute OHLC bars and append the data to the created partitioned table "tbName":

//simulate OHLC data and append it to the partitioned table

n = 1210000

randPrice = round(10+rand(1.0, 100), 2)

randVolume = 100+rand(100, 100)

SecurityID = lpad(string(take(0..4999, 5000)), 6, `0)

DateTime = (2023.01.08T09:30:00 + take(0..120, 121)*60).join(2023.01.08T13:00:00 + take(0..120, 121)*60)

PreClosePx = rand(randPrice, n)

OpenPx = rand(randPrice, n)

HighPx = rand(randPrice, n)

LowPx = rand(randPrice, n)

LastPx = rand(randPrice, n)

Volume = int(rand(randVolume, n))

Amount = round(LastPx*Volume, 2)

tmp = cj(table(SecurityID), table(DateTime))

t = tmp.join!(table(PreClosePx, OpenPx, HighPx, LowPx, LastPx, Volume, Amount))

dbName = "dfs://testDB"

tbName = "testTB"

loadTable(dbName, tbName).append!(t)

For more details about the above functions, see Function References or the function documentation popup on the web interface.

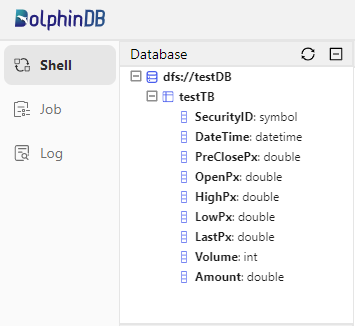

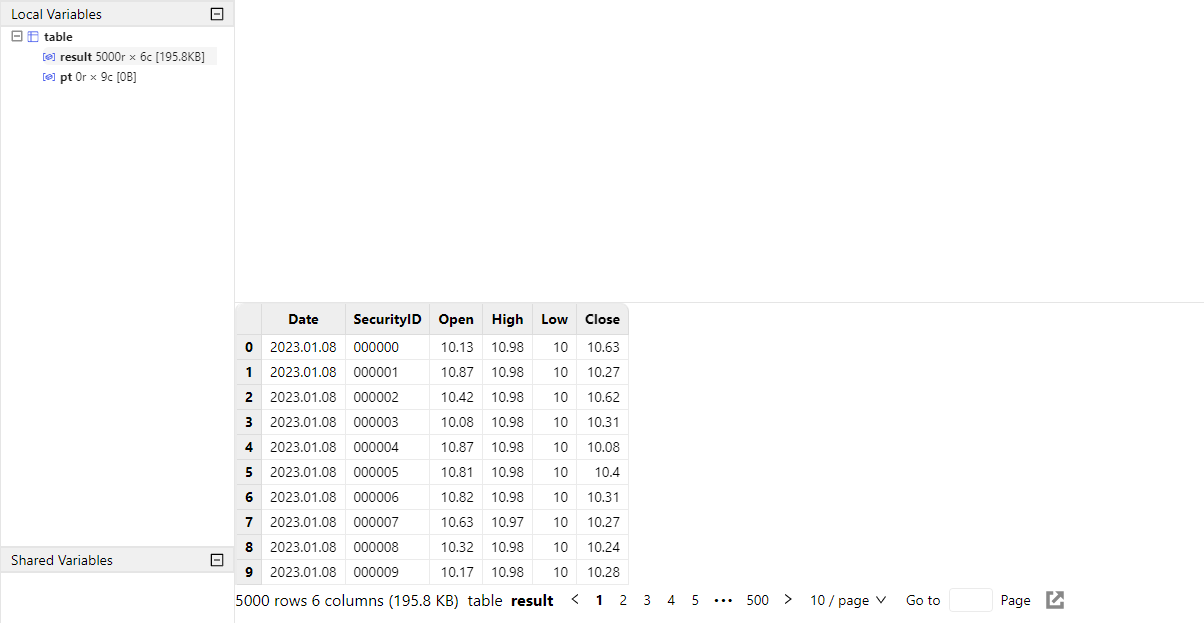

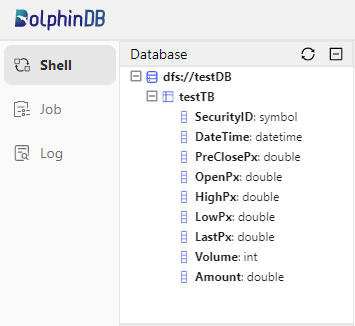

You can check the created database and table in the Database on the left side of the web interface.

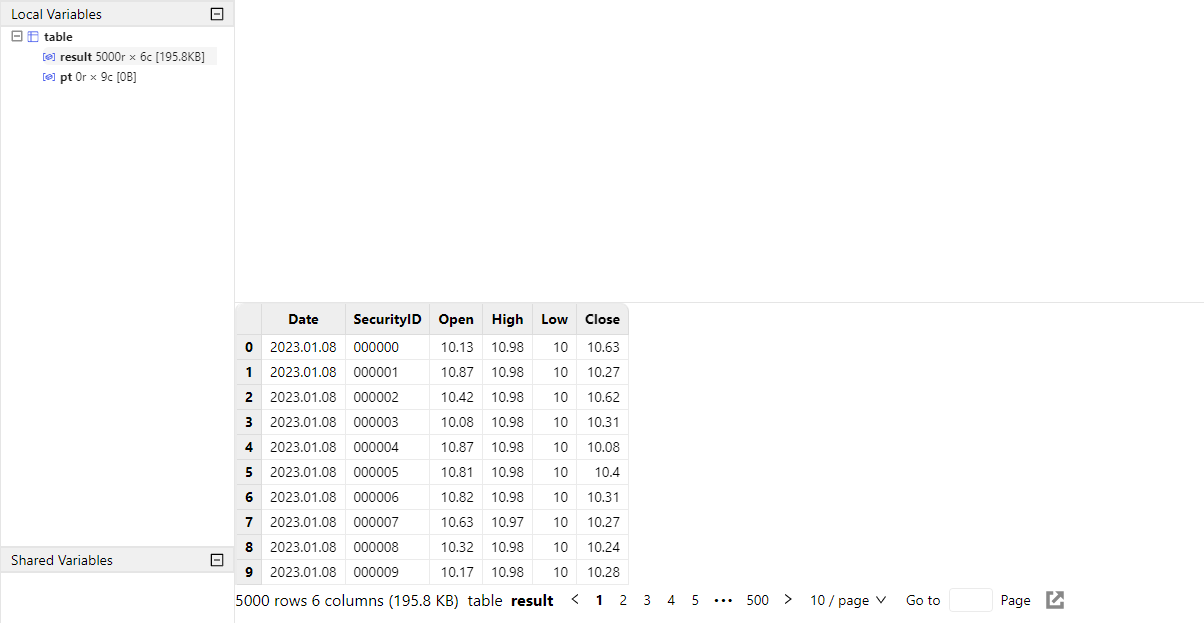

Variables you created can be checked in Local Variables. You can click on the corresponding variable name to preview the related information (including data type, size, and occupied memory size).

Step 5: Perform Queries and Computation on Compute Nodes

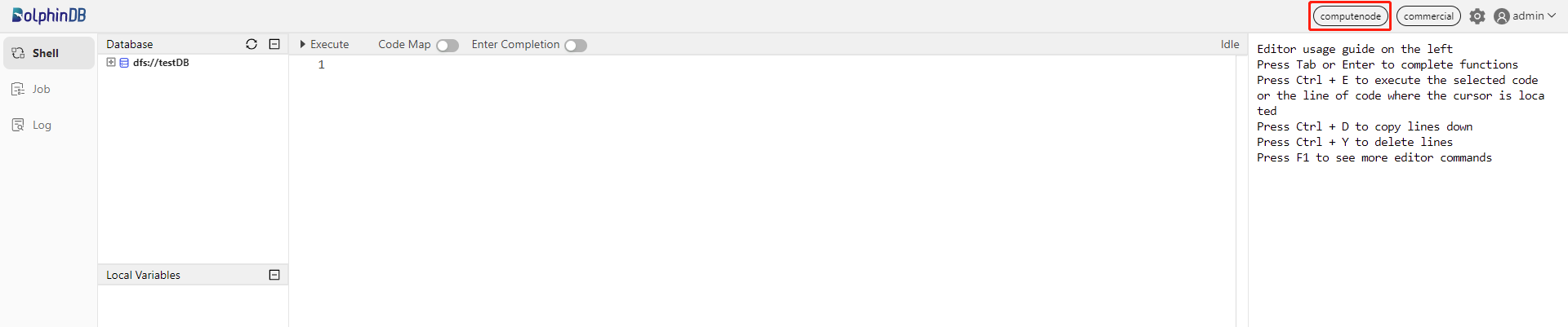

Compute nodes are used for queries and computation. The following example shows how to perform these operations in partitioned tables on a compute node.

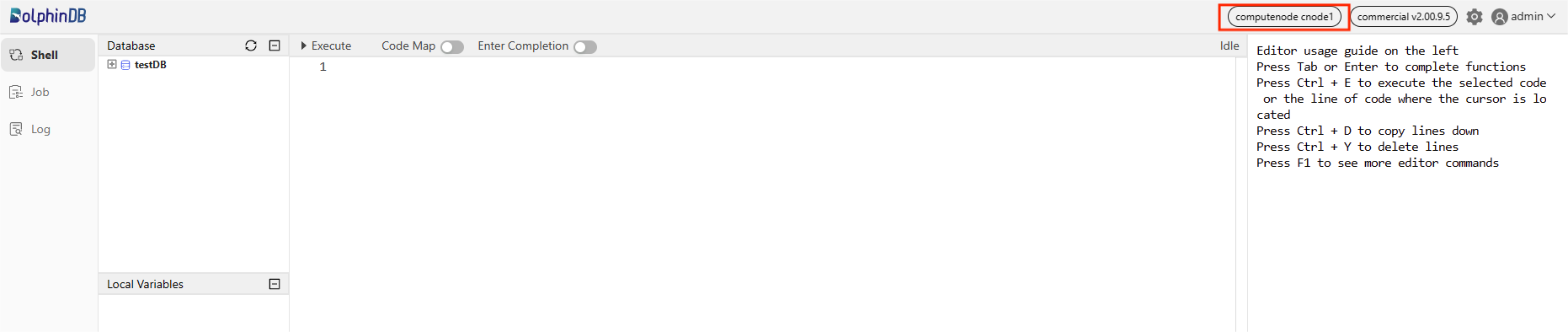

First, open the web interface of the Controller, and then click the corresponding Compute node to open its Shell interface:

You can also enter IP address and port number of the compute nodes in your browser to navigate to the Shell interface.

Execute the following script to load the partitioned table:

// load the partitioned table

pt = loadTable("dfs://testDB", "testTB")

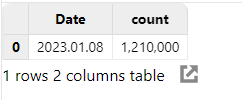

Note: Only metadata of the partitioned table is loaded here. Then execute the following script to count the number of the records for each day in table "pt":

//If the result contains a small amount of data, you can download it to display on the client directly.

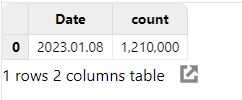

select count(*) from pt group by date(DateTime) as Date

The result will be displayed at the bottom of the web interface:

Execute the following script to calculate OHLC bars for each stock per day:

// If the result contains a large amount of data, you can assign it to a variable that occupies the server memory, and download it to display in seperate pages on the client.

result = select first(LastPx) as Open, max(LastPx) as High, min(LastPx) as Low, last(LastPx) as Close from pt group by date(DateTime) as Date, SecurityID

The result is assigned to the variable result. It will not be displayed on the client directly, thus reducing the memory of the client. To check the results, click on the result in the Local Variables.

Deploy Single-Server Cluster on Windows OS

In this tutorial, you will learn how to deploy a basic single-server cluster. Use the config file from the installation package to deploy a single-server cluster with 1 controller, 1 agent, 1 data node and 1 compute node.

Step 1: Download

- Official website: DolphinDB

- Extract the installation package to the specified directory (e.g., to C:\DolphinDB):

C:\DolphinDBNote: The directory name cannot contain any space characters, otherwise the startup of the data node will fail. For example, do not extract it to the Program Files folder on Windows.

Step 2: Update License File

If you have obtained the Enterprise Edition license, use it to replace the following file:

C:\DolphinDB\server\dolphindb.licOtherwise, continue to use the community version, which allows up to 8 GB RAM use for 20 years.

Step 3: Start DolphinDB Cluster

- Start Controller and Agent in Console Mode

Navigate to the folder C:\DolphinDB\server\clusterDemo. Then double click startController.bat and startAgent.bat.

- Start Controller and Agent in Background Mode

Navigate to the folder C:\DolphinDB\server\clusterDemo. Then double click backgroundStartController.vbs and backgroundStartAgent.vbs.

- Start Data Nodes and Compute Nodes

You can start or stop data nodes and compute nodes, and modify cluster configuration parameters on the web interface. Enter the deployment server IP address and controller port number in the browser to navigate to the DolphinDB Web. The server address (ip:port) used in this tutorial is 10.0.0.80:8900. Below is the web interface. Log in with the default administrator account (username: admin, password: 123456). Then select the required data nodes and compute nodes, and click on the execute/stop button.

Click on the refresh button to check the status of the nodes. The following green check marks mean all the selected nodes have been turned on:

Note: If the browser and DolphinDB are not deployed on the same server, you should turn off the firewall or open the corresponding port beforehand.

Step 4: Create Databases and Partitioned Tables on Data Nodes

Data nodes can be used for data storage, queries and computation. The following example shows how to create databases and write data on data nodes. First, open the web interface of the Controller, and click on the corresponding Data node to open its Shell interface:

You can also enter IP address and port number of the data nodes in your browser to navigate to the Shell interface.

Execute the following script to create a database and a partitioned table:

// create a database and a partitioned table

login("admin", "123456")

dbName = "dfs://testDB"

tbName = "testTB"

if(existsDatabase(dbName)){

dropDatabase(dbName)

}

db = database(dbName, VALUE, 2021.01.01..2021.12.31)

colNames = `SecurityID`DateTime`PreClosePx`OpenPx`HighPx`LowPx`LastPx`Volume`Amount

colTypes = [SYMBOL, DATETIME, DOUBLE, DOUBLE, DOUBLE, DOUBLE, DOUBLE, INT, DOUBLE]

schemaTable = table(1:0, colNames, colTypes)

db.createPartitionedTable(table=schemaTable, tableName=tbName, partitionColumns=`DateTime)

Then, run the following scripts to generate 1-minute OHLC bars and append the data to the created partitioned table "tbName":

// simulate OHLC data and append it to the partitioned table

n = 1210000

randPrice = round(10+rand(1.0, 100), 2)

randVolume = 100+rand(100, 100)

SecurityID = lpad(string(take(0..4999, 5000)), 6, `0)

DateTime = (2023.01.08T09:30:00 + take(0..120, 121)*60).join(2023.01.08T13:00:00 + take(0..120, 121)*60)

PreClosePx = rand(randPrice, n)

OpenPx = rand(randPrice, n)

HighPx = rand(randPrice, n)

LowPx = rand(randPrice, n)

LastPx = rand(randPrice, n)

Volume = int(rand(randVolume, n))

Amount = round(LastPx*Volume, 2)

tmp = cj(table(SecurityID), table(DateTime))

t = tmp.join!(table(PreClosePx, OpenPx, HighPx, LowPx, LastPx, Volume, Amount))

dbName = "dfs://testDB"

tbName = "testTB"

loadTable(dbName, tbName).append!(t)

For more details about the above functions, see Function References or the function documentation popup on the web interface.

You can check the created database and table in the Database on the left side of the web interface.

Variables you created can be checked in Local Variables. You can click on the corresponding variable name to preview the related information (including data type, size, and occupied memory size).

Step 5: Perform Queries and Computation on Compute Nodes

Compute nodes are used for queries and computation. The following example shows how to perform these operations in partitioned tables on a compute node. First, open the web interface of the Controller, and then click the corresponding Compute node to open its Shell interface:

You can also enter IP address and port number of the compute nodes in your browser to navigate to the Shell interface.

Execute the following script to load the partitioned table:

// load the partitioned table

pt = loadTable("dfs://testDB", "testTB")

Note: Only metadata of the partitioned table is loaded here. Then execute the following script to count the number of the records for each day in table "pt":

//If the result contains a small amount of data, you can download it to display on the client directly.

select count(*) from pt group by date(DateTime) as Date

The result will be displayed at the bottom of the web interface:

Execute the following script to calculate OHLC bars for each stock per day:

// If the result contains a large amount of data, you can assign it to a variable that occupies the server memory, and download it to display in seperate pages on the client.

result = select first(LastPx) as Open, max(LastPx) as High, min(LastPx) as Low, last(LastPx) as Close from pt group by date(DateTime) as Date, SecurityIDThe result is assigned to the variable result. It will not be displayed on the client directly, thus reducing the memory of the client. To check the results, click on the result in the Local Variables.

Upgrade DolphinDB Cluster

Upgrade on Linux

Step 1: Close all nodes

Navigate to the folder /DolphinDB/server/clusterDemo to execute the following command:

./stopAllNode.shStep 2: Back up the Metadata

The metadata file is created only when data is written to the single-server cluster. Otherwise, you can just skip this step.

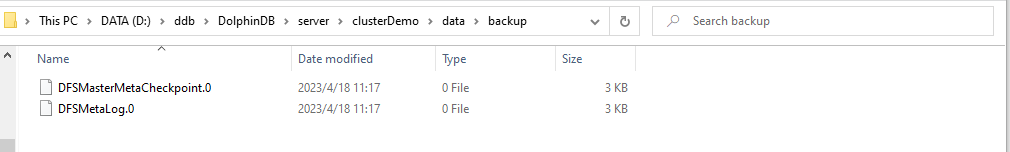

- Back up the Metadata of Controller

By default, the metadata of controller is stored in the DFSMetaLog.0 file under the folder /DolphinDB/server/clusterDemo/data:

/DolphinDB/server/clusterDemo/dataWhen the metadata exceeds certain size limits, a DFSMasterMetaCheckpoint.0 file will be generated.

Navigate to the folder /DolphinDB/server/clusterDemo/data to execute the following shell commands:

mkdir backup

cp -r DFSMetaLog.0 backup

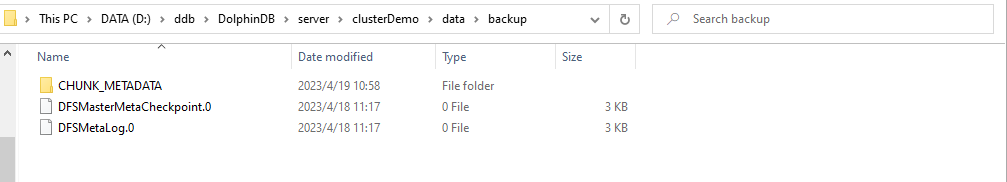

cp -r DFSMasterMetaCheckpoint.0 backup- Back up the Metadata of Data Nodes

By default, the metadata of data nodes is stored in the folder /DolphinDB/server/clusterDemo/data/<data node alias>/storage/CHUNK_METADATA. The default storage directory in this tutorial is:

/DolphinDB/server/clusterDemo/data/dnode1/storage/CHUNK_METADATANavigate to the above directory to execute the following shell command:

cp -r CHUNK_METADATA ../../backupNote: If the backup files are not in the above default directory, check the directory specified by the configuration parameters dfsMetaDir and chunkMetaDir. If the two parameters are not modified but the configuration parameter volumes is specified, then you can find the CHUNK_METADATA under the volumes directory.

Step 3: Upgrade

Note: When the server is upgraded to a certain version, the plugin should also be upgraded to the corresponding version.

- Online Upgrade

Navigate to the folder /DolphinDB/server/clusterDemo to execute the following command:

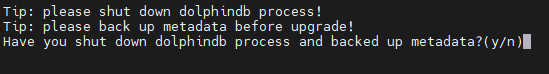

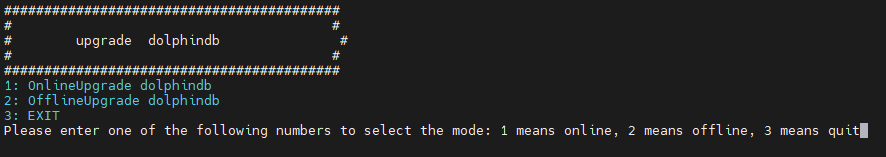

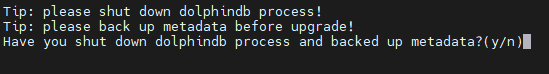

./upgrade.shThe following prompt is returned:

Type y and press Enter:

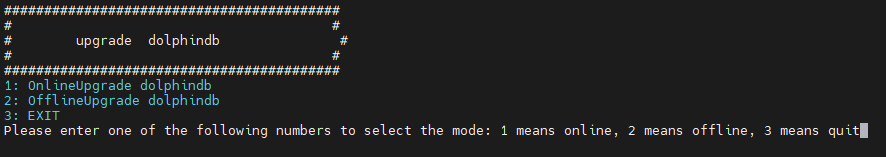

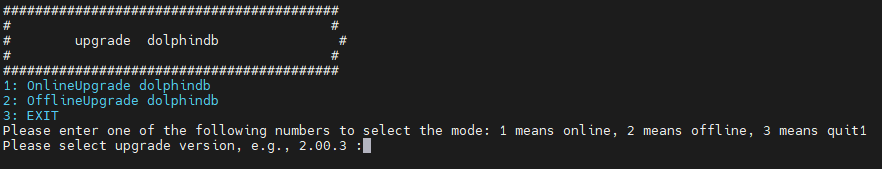

Type 1 and press Enter:

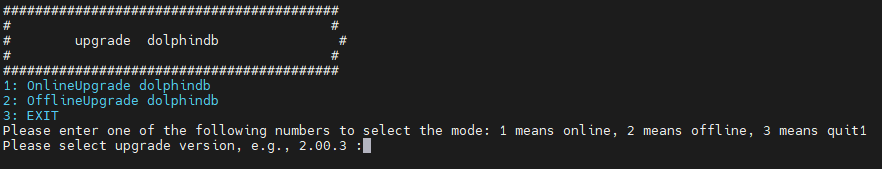

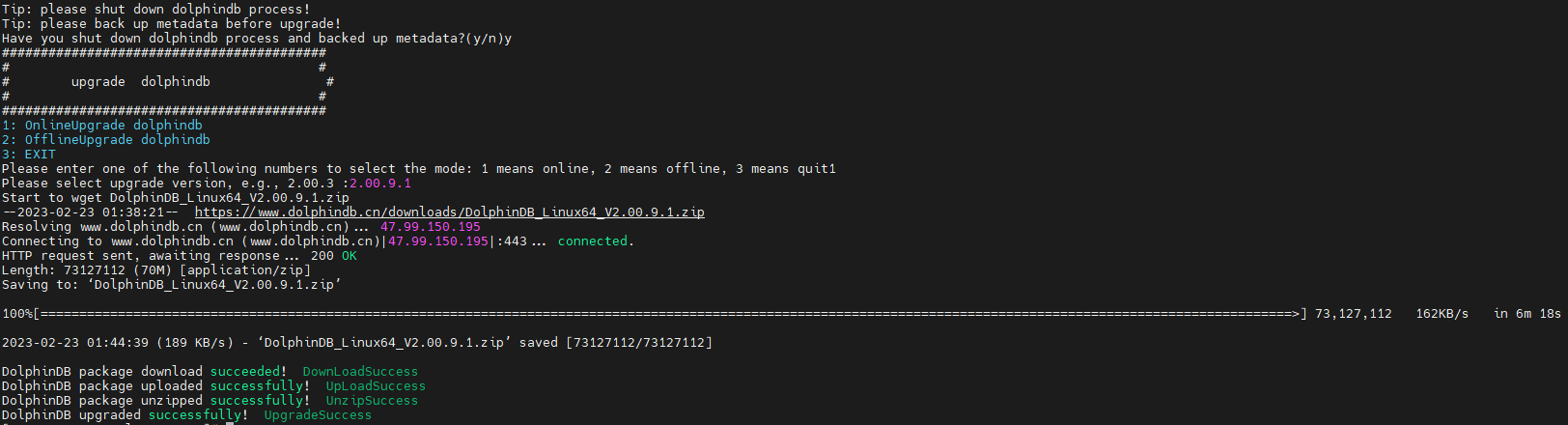

Type a version number and press Enter. To update to version 2.00.9.1, for example, type 2.00.9.1 and press Enter. The following prompt indicates a successful upgrade.

- Offline Upgrade

Download a new version of server package from DolphinDB website.

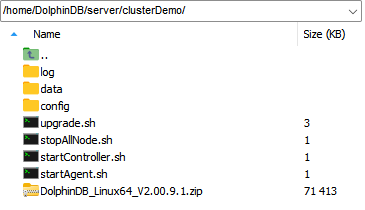

Upload the installation package to /DolphinDB/server/clusterDemo. Take version 2.00.9.1 as an example.

Navigate to the folder /DolphinDB/server/clusterDemo to execute the following command:

./upgrade.shThe following prompt is returned:

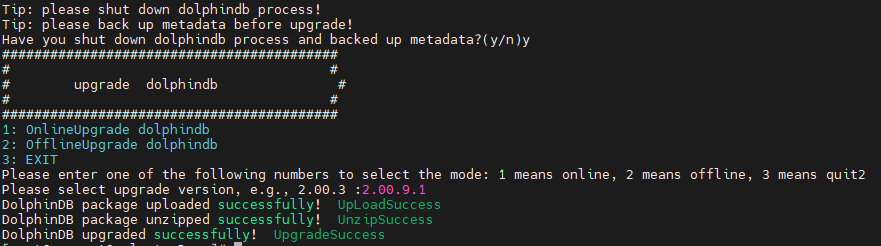

Type y and press Enter:

Type 2 and press Enter:

Type a version number and press Enter. To update to version 2.00.9.1, for example, type 2.00.9.1 and press Enter. The following prompt indicates a successful upgrade.

If the required update version is a JIT or ABI version, the version number should match the installation package. For example, if the installation package is named "DolphinDB_Linux64_V2.00.9.1_JIT.zip", the version number to be entered should be "2.00.9.1_JIT".

Step 4: Restart the Cluster

Navigate to folder /DolphinDB/server/clusterDemo to start the controller and agent with no specified order.

- Start Controller

sh startController.sh- Start Agent

sh startAgent.shOpen the web interface and execute the following script to check the current version of DolphinDB.

version()Upgrade on Windows

Step 1: Close all nodes

- In console mode, close the foreground process.

- In background mode, close DolphinDB process from Task Manager.

Step 2: Back up the Metadata

The metadata file is created only when data is written to the single-server cluster. Otherwise, you can just skip this step.

- Back up the Metadata of Controller

By default, the metadata of controller is stored in the DFSMetaLog.0 file under the folder C:\DolphinDB\server\clusterDemo\data:

C:\DolphinDB\server\clusterDemo\dataWhen the metadata exceeds certain size limits, a DFSMasterMetaCheckpoint.0 file will be generated.

Create a folder backup in this directory, then copy the DFSMetaLog.0 file and DFSMasterMetaCheckpoint.0 file to the folder:

- Back up the Metadata of Data Nodes

By default, the metadata of data nodes is stored in the folder C:\DolphinDB\server\clusterDemo\data\ <data node alias>\storage\CHUNK_METADATA. The default storage directory in this tutorial is:

C:\DolphinDB\server\clusterDemo\data\dnode1\storage\CHUNK_METADATACopy the CHUNK_METADATA file to the above backup folder:

Note: If the backup files are not in the above default directory, check the directory specified by the configuration parameters dfsMetaDir and chunkMetaDir. If the two parameters are not modified but the configuration parameter volumes is specified, then you can find the CHUNK_METADATA under the volumes directory.

Step 3: Upgrade

- Download a new version of installation package from DolphinDB website.

- Replace an existing files with all files (except dolphindb.cfg and dolphindb.lic) in the current \DolphinDB\server folder.

Note: When the server is upgraded to a certain version, the plugin should also be upgraded to the corresponding version.

Step 4: Restart the Cluster

- Start Controller and Agent in Console Mode:

Navigate to the folder C:\DolphinDB\server\clusterDemo. Then double click startController.bat and startAgent.bat.

- Start Controller and Agent in Background Mode

Navigate to the folder C:\DolphinDB\server\clusterDemo. Then double click backgroundStartController.vbs and backgroundStartAgent.vbs.

Open the web interface and execute the following script to check the current version of DolphinDB.

version()Update License File

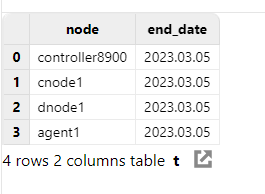

Before updating, open the web interface and execute the following code to check the expiration time:

use ops

updateAllLicenses()

Check the "end_date" to confirm whether the update is successful.

Step 1: Replace the License File

Replace an existing license file with a new one.

License file path on Linux:

/DolphinDB/server/dolphindb.licLicense file path on Windows:

C:\DolphinDB\server\dolphindb.licStep 2: Update License File

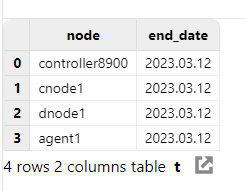

- Online Update

Open the web interface to execute the following script:

use ops

updateAllLicenses()The "end_date" is updated:

Note:

The client name of the license cannot be changed.

The number of nodes, memory size, and the number of CPU cores cannot be smaller than the original license.

The update takes effect only on the node where the function is executed. Therefore, in a cluster mode, the function needs to be run on all controllers, agents, data nodes, and compute nodes.

The license type must be either commercial (paid) or free.

- Offline Update

Restart DolphinDB cluster to complete the updates.

Cluster Configuration Files

The DolphinDB installer includes a built-in cluster configuration file, which can be used to configure a basic single-server cluster with 1 controller, 1 agent, 1 data node, and 1 compute node.

The cluster configuration file for Linux is located in the folder /DolphinDB/server/clusterDemo/config. The configuration file for Windows is in C:\DolphinDB\server\clusterDemo\config. For better performance, you need to manually modify the cluster configuration file (including controller.cfg, agent.cfg, cluster.nodes and cluster.cfg).

controller.cfg

Execute the following shell command to modify controller.cfg:

vim ./controller.cfgmode=controller

localSite=localhost:8900:controller8900

dfsReplicationFactor=1

dfsReplicaReliabilityLevel=2

dataSync=1

workerNum=4

localExecutors=3

maxConnections=512

maxMemSize=8

lanCluster=0

The default port number of the controller is 8900. You can specify its IP address, port number and alias by modifying the localSite parameter.

- For example, specify <ip>:<port>:<alias> as 10.0.0.80:8900:controller8900:

localSite=10.0.0.80:8900:controller8900Other parameters can be modified based on your own device.

agent.cfg

Execute the following shell command to modify agent.cfg:

vim ./agent.cfgmode=agent

localSite=localhost:8901:agent1

controllerSite=localhost:8900:controller8900

workerNum=4

localExecutors=3

maxMemSize=4

lanCluster=0

The controllerSite in agent.cfg must match the localSite parameter in controller's controller.cfg file. This is how the agent locates the cluster controller. Any changes to localSite in controller.cfg must be reflected in agent.cfg. The localSite parameter specifies the IP address, port number, and agent node alias. Except for localSite and controllerSite, other parameters in agent.cfg are optional and can be modified as needed based on specific requirements.

Login to the proxy node is only permitted after the sites parameter is properly set in agent.cfg. The sites parameter should include information about both the current agent and the controller in the cluster, detailing their IP addresses, port numbers, and node aliases. For example:

sites=10.0.0.80:8901:agent1:agent,10.0.0.80:8900:controller8900:controllerThe default port number of the agent is 8901. You can specify its IP address, port number and alias by modifying the localSite parameter. For example:

localSite=10.0.0.80:8901:agent1Other parameters can be modified based on your own device.

cluster.nodes

Execute the following shell command to modify cluster.nodes:

vim ./cluster.nodeslocalSite,mode

localhost:8901:agent1,agent

localhost:8902:dnode1,datanode

localhost:8903:cnode1,computenode

cluster.nodes is used to store information about agents, data nodes and compute nodes. The default cluster configuration file contains 1 agent, 1 data node and 1 compute node. You can configure the number of nodes as required. This configuration file specifies localSite and mode. The parameter localSite contains the node IP address, port number and alias, which are separated by colons ":". The parameter mode specifies the node type.

❗ Node aliases are case sensitive and must be unique in a cluster.

cluster.cfg

Execute the following shell command to modify cluster.cfg:

vim ./cluster.cfgmaxMemSize=32

maxConnections=512

workerNum=4

localExecutors=3

maxBatchJobWorker=4

OLAPCacheEngineSize=2

TSDBCacheEngineSize=1

newValuePartitionPolicy=add

maxPubConnections=64

subExecutors=4

lanCluster=0

enableChunkGranularityConfig=true

The configuration parameters in this file apply to each data node and compute node in the cluster. You can modify them based your own device.

FAQ

Q1: Failed to start the server for the port is occupied by other programs

If you cannot start the server, you can first check the log file of nodes under /DolphinDB/server/clusterDemo/log.

If the following error occurs, it indicates that the specified port is occupied by other programs.

<ERROR> :Failed to bind the socket on port 8900 with error code 98In such case, you can change to another free port in the config file.

Q2: Failed to access the web interface

Despite the server running and the server address being correct, the web interface remains inaccessible.

A common reason for the above problem is that the browser and DolphinDB are not deployed on the same server, and a firewall is enabled on the server where DolphinDB is deployed. You can solve this issue by turning off the firewall or by opening the corresponding port.

Q3: Roll back a failed upgrade on Linux

If you cannot start DolphinDB single-server cluster after upgrade, you can follow steps below to roll back to the previous version.

Step 1: Restore metadata files

Navigate to the folder /DolphinDB/server/clusterDemo/data to restore metadata files from backup with the following commands:

cp -r backup/DFSMetaLog.0 ./

cp -r backup/DFSMasterMetaCheckpoint.0 ./

cp -r backup/CHUNK_METADATA ./dnode1/storage

Step 2: Restore program files

Download the previous version of server package from the official website. Replace the server that failed to upgrade with all files (except dolphindb.cfg, clusterDemo and dolphindb.lic) just downloaded.

Q4: Roll back a failed upgrade on Windows

If you cannot start DolphinDB single-server cluster after upgrade, you can follow steps below to roll back to the previous version.

Step 1: Restore metadata files

Replace the DFSMetaLog.0 and DFSMasterMetaCheckpoint.0 files under C:\DolphinDB\server\clusterDemo\data with the corresponding backup files under the backup folder created before upgrade.

Replace the CHUNK_METADATA files under C:\DolphinDB\server\clusterDemo\data\dnode1\storage with the corresponding backup files under the backup folder created before upgrade.

Step 2: Restore program files

Download the previous version of server package from the official website. Replace the server that failed to upgrade with all files (except dolphindb.cfg, clusterDemo and dolphindb.lic) just downloaded.

Q5: Failed to update the license file

Updating the license file online has to meet the requirements listed in Step 2: Update License File.

If not, you can choose to update offline or apply for an Enterprise Edition License.

Q6: Change configuration

For more details on configuration parameters, refer to Configuration.

If you encounter performance problems, you can contact our team on Slack for technical support.