DolphinDB Database Cache Management and Cleanup

Database caching can significantly enhance the performance and responsiveness of DolphinDB. However, redundant or outdated cache may consume excessive memory resources and affect system efficiency. This article introduces how to efficiently clear the cache in DolphinDB to optimize resource utilization and overall system performance.

1. Cache Types

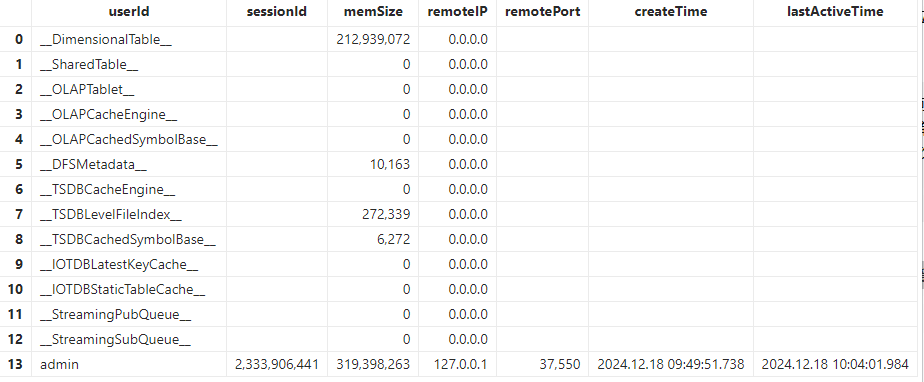

In DolphinDB, the memory usage on the current node can be obtained using the getSessionMemoryStat function.

The various types of cache are described as follows:

| Cache Type | Meaning |
|---|---|
| __DimensionalTable__ | the cache of dimension tables (in Bytes) |
| __SharedTable__ | the cache of shared tables (in Bytes) |
| __OLAPTablet__ | the cache of the OLAP databases (in Bytes) |
| __OLAPCacheEngine__ | the memory usage of OLAP cache engine (in Bytes) |
| __OLAPCachedSymbolBase__ | the SYMBOL base cache in OLAP engine (in Bytes) |
| __DFSMetadata__ | the memory usage of DFS metadata in distributed storage (in Bytes) |
| __TSDBCacheEngine__ | the cache of the TSDB engine (in Bytes) |
| __TSDBLevelFileIndex__ | the cache of level file index in the TSDB engine (in Bytes) |
| __TSDBCachedSymbolBase__ | the SYMBOL base cache in TSDB engine (in Bytes) |
| __StreamingPubQueue__ | the unprocessed messages in a publisher queue |
| __StreamingSubQueue__ | the unprocessed messages in a subscriber queue |
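As a quick illustration of how these entries can be inspected together, the following sketch lists every cache-related row reported for the current node. It assumes (this is not stated above) that cache entries are the only rows whose userId begins with two underscores, and it relies on the built-in startsWith function to filter them.

```dolphindb
// Sketch: list cache-related entries reported for the current node.
// Assumption: cache entries are the rows whose userId starts with "__";
// rows belonging to ordinary user sessions are filtered out.
stat = getSessionMemoryStat()
select userId, memSize from stat where startsWith(userId, "__")
```

Running the query before and after a cleanup gives a one-glance view of which caches actually shrank.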

2. Database Cache Cleanup

This section describes how to clear different types of database caches.

All examples in this document have been verified on DolphinDB version 3.00.2 deployed in single-node mode. To verify whether the cache has been successfully cleared, run the following command before and after the cleanup:

exec memSize from getSessionMemoryStat() where userId="__******__"

2.1 Dimension Table Cache Cleanup

Dimension tables are unpartitioned tables in a distributed database. When a dimension table is queried, all of its data is loaded into memory. Dimension tables are suitable for storing small datasets that are infrequently updated.

There are several ways to clear dimension table caches:

- Automatic eviction: Since versions 2.00.11 and 1.30.23, DolphinDB has provided an automatic cache eviction mechanism. When overall memory usage exceeds the threshold specified by the system parameter warningMemSize, the system attempts to clear dimension table cache based on the Least Recently Used (LRU) policy.

- Manual cleanup: You can also manually clear the dimension table cache by calling one of the following functions:

  - Call the clearCachedDatabase function.

  - Call the clearAllCache function, which, starting from versions 2.00.11 and 1.30.23, also includes dimension table caches in its cleanup scope.
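To gauge how close a node is to the automatic eviction threshold, one could compare the memory it has allocated with warningMemSize. The sketch below assumes that getConfig can return the value of warningMemSize (taken here to be in GB) and that mem().allocatedBytes reflects the memory allocated by the node. Neither function is covered in this article, so treat the snippet as a rough check rather than a description of how the eviction trigger is computed.

```dolphindb
// Assumption: getConfig returns the configured warningMemSize (in GB);
// the result may be empty if the parameter is not available on your version.
threshold = getConfig("warningMemSize")
// Memory allocated by the current node, converted from bytes to GB
// (1073741824 = 1024 * 1024 * 1024)
allocatedGB = mem().allocatedBytes \ 1073741824
print("warningMemSize (GB): " + string(threshold))
print("allocated (GB): " + string(round(allocatedGB, 2)))
```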

The tables used in Examples 1, 2, and 3 in the following sections are generated by the script below:

dbNameOLAP = "dfs://test_OLAP"

dbNameTSDB = "dfs://test_TSDB"

if(existsDatabase(dbNameOLAP)){

dropDatabase(dbNameOLAP)

}

if(existsDatabase(dbNameTSDB)){

dropDatabase(dbNameTSDB)

}

t = table(1..10 as x, symbol(string(1..10)) as y)

dbOLAP = database(dbNameOLAP, VALUE, 1..10)

dbTSDB = database(dbNameTSDB, VALUE, 1..10, engine="TSDB")

createTable(dbOLAP, t, `dt1).append!(t)

createTable(dbOLAP, t, `dt2).append!(t)

createTable(dbOLAP, t, `dt3).append!(t)

createTable(dbTSDB, t, `dt1, sortColumns=`x).append!(t)

createTable(dbTSDB, t, `dt2, sortColumns=`x).append!(t)

createTable(dbTSDB, t, `dt3, sortColumns=`x).append!(t)

Example 1. Clear the cache of a specific dimension table.

// Generate dimension table cache

select * from loadTable(dbOLAP, `dt1)

select * from loadTable(dbTSDB, `dt1)

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__DimensionalTable__"

// Clear dimension table cache

pnodeRun(clearCachedDatabase{dbNameOLAP, `dt1})

pnodeRun(clearCachedDatabase{dbNameTSDB, `dt1})

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__DimensionalTable__"

Example 2. Clear the cache of all tables within a specified database.

// Generate dimension table cache

select * from loadTable(dbNameOLAP, `dt1)

select * from loadTable(dbNameOLAP, `dt2)

select * from loadTable(dbNameOLAP, `dt3)

select * from loadTable(dbNameTSDB, `dt1)

select * from loadTable(dbNameTSDB, `dt2)

select * from loadTable(dbNameTSDB, `dt3)

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__DimensionalTable__"

// Clear the cache of all tables in each database

pnodeRun(clearCachedDatabase{dbNameOLAP})

pnodeRun(clearCachedDatabase{dbNameTSDB})

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__DimensionalTable__"

Example 3. Clear dimension table caches by invoking the clearAllCache function.

// Generate dimension table cache

select * from loadTable(dbNameOLAP, `dt1)

select * from loadTable(dbNameOLAP, `dt2)

select * from loadTable(dbNameOLAP, `dt3)

select * from loadTable(dbNameTSDB, `dt1)

select * from loadTable(dbNameTSDB, `dt2)

select * from loadTable(dbNameTSDB, `dt3)

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__DimensionalTable__"

// Clear all cache

pnodeRun(clearAllCache)

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__DimensionalTable__"

2.2 Shared Table Cache Cleanup

By default, in-memory tables and other local objects are session-scoped and cannot be accessed from other sessions. To share a table across sessions, use the share function to register it in the global namespace.

When a shared table variable is no longer needed, you can remove it from the global namespace and free the associated memory by calling the undef function with objType=SHARED.
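Before releasing anything, it can help to see which shared objects currently exist on the node. The following is a minimal sketch that assumes the built-in objs function (not discussed in this article) lists shared objects when called with true and flags them in a column named shared.

```dolphindb
// Sketch: list shared objects on the current node with their approximate size in bytes.
// Assumption: objs(true) includes shared objects and marks them in the "shared" column.
select name, form, bytes from objs(true) where shared = true
```

Any name returned here could then be passed to undef with objType=SHARED once it is no longer needed.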

Example 4. Release a specific shared table variable.

// Share a table

t = table(1..10 as x, 1..10 as y)

share t as st

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__SharedTable__"

// Release the shared table

undef(`st, SHARED)

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__SharedTable__"

2.3 OLAP Storage Engine Read Cache

The OLAP storage engine automatically caches historical data that has been queried, so that subsequent queries on the same data can be served from memory. Read caches for partitioned tables are shared across sessions to improve memory efficiency. Memory management for distributed tables has the following characteristics:

- The OLAP engine manages memory at the granularity of individual columns within each partition.

- Data is only loaded onto the local node where it resides and is not transferred between nodes.

- Cached partition data is shared among users querying the same partition.

- When total memory usage remains below the warningMemSize threshold, the engine retains as much cached data as possible to optimize query performance.

You can clear the OLAP read cache in the following ways:

- When total memory usage reaches the warningMemSize threshold, the system automatically evicts cached data using an LRU (Least Recently Used) policy.

- You can also manually clear the cache using the clearAllCache or clearCachedDatabase function.

The tables used in Example 5 and Example 6 are generated by the following script:

dbName = "dfs://test"

if(existsDatabase(dbName)){

dropDatabase(dbName)

}

t = table(1..10 as x, symbol(string(1..10)) as y)

db = database(dbName, VALUE, 1..10)

pt1 = createPartitionedTable(db, t, `pt1, `x).append!(t)

pt2 = createPartitionedTable(db, t, `pt2, `x).append!(t)

pt3 = createPartitionedTable(db, t, `pt3, `x).append!(t)

Example 5. Clearing OLAP read caches using clearAllCache.

// Load OLAP engine read cache by querying the table

select * from loadTable(dbName, `pt1)

select * from loadTable(dbName, `pt2)

select * from loadTable(dbName, `pt3)

//output: 240

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__OLAPTablet__"

// Clear OLAP engine caches

pnodeRun(clearAllCache)

//output: 0

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__OLAPTablet__"

Example 6. Clearing OLAP read caches using clearCachedDatabase.

// Load OLAP engine read cache by querying the table

select * from loadTable(dbName, `pt1)

select * from loadTable(dbName, `pt2)

select * from loadTable(dbName, `pt3)

//output: 240

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__OLAPTablet__"

// Clear OLAP engine caches

pnodeRun(clearCachedDatabase{dbName})

//output: 0

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__OLAPTablet__"

2.4 OLAP Storage Engine Write Cache

The cache engine in DolphinDB is a write buffer designed to improve write throughput. It follows a standard approach of first writing to a redo log and write cache, then flushing data to disk in batches once a threshold is reached.

Garbage collection (GC) of the cache engine completes once the data has been flushed to disk successfully. The cache engine is reclaimed in the following ways:

- Scheduled check: every 30 seconds, the system checks whether data should be flushed to disk.

- Threshold-based reclaim: when the data in the cache reaches 30% of OLAPCacheEngineSize, it is written to the column files on disk.

- Manual reclaim: call the flushOLAPCache function.

Note: flushOLAPCache is executed asynchronously, meaning that memory is not freed immediately after the call. You can use getOLAPCacheEngineSize to monitor the current memory usage of the cache engine.
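Because the flush is asynchronous, a fixed sleep may be either too short or longer than necessary. One possible alternative is to poll getOLAPCacheEngineSize until the cache engine is empty or a timeout expires. The sketch below runs on the current node only; timeoutMs and stepMs are illustrative values rather than recommended settings, and the loop assumes the reported size drops to 0 once all committed transactions have been flushed.

```dolphindb
// Sketch: flush the OLAP cache engine on the current node and wait for it to drain.
timeoutMs = 5000   // illustrative upper bound on the total wait
stepMs = 100       // illustrative polling interval
waited = 0
flushOLAPCache()
do {
    sleep(stepMs)
    waited += stepMs
} while(getOLAPCacheEngineSize() > 0 && waited < timeoutMs)
// Bytes still held by the cache engine; a non-zero value means the timeout was hit
getOLAPCacheEngineSize()
```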

Example 7. Releasing write caches using flushOLAPCache.

// Write data to OLAP cache engine

dbName = "dfs://test"

if(existsDatabase(dbName)){

dropDatabase(dbName)

}

t = table(1..10 as x, symbol(string(1..10)) as y)

db = database(dbName, VALUE, 1..10)

dt1 = createTable(db, t, `dt1).append!(t)

dt2 = createTable(db, t, `dt2).append!(t)

dt3 = createTable(db, t, `dt3).append!(t)

pt1 = createPartitionedTable(db, t, `pt1, `x).append!(t)

pt2 = createPartitionedTable(db, t, `pt2, `x).append!(t)

pt3 = createPartitionedTable(db, t, `pt3, `x).append!(t)

//output: 480

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__OLAPCacheEngine__"

// Flush committed transactions from the OLAP cache to the disk

pnodeRun(flushOLAPCache)

sleep(1000)

//output: 0

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__OLAPCacheEngine__"

2.5 OLAP Storage Engine SYMBOL Base Cache

In the OLAP engine, when a distributed table contains data of SYMBOL type, the system maintains a SYMBOL base — a mapping between each symbol and its corresponding internal ID (as only IDs are physically stored). During read and write operations, this symbol dictionary is cached to improve performance.

This caching is reflected in session memory statistics: the read cache is reported under "__OLAPTablet__", while the write cache is reported under "__OLAPCachedSymbolBase__" in the output of getSessionMemoryStat.

To manage memory efficiently, the system periodically evicts entries from "__OLAPCachedSymbolBase__". A cached SYMBOL base is eligible for eviction if both of the following conditions are met:

- It has not been accessed for over 5 minutes.

- The associated data is neither in the cache engine nor involved in any active transaction.
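Since this cache has no dedicated cleanup function in this article, observing it is mostly a matter of querying the two statistics side by side. A minimal sketch, using only functions already shown above:

```dolphindb
// Sketch: show the statistics that cover the OLAP SYMBOL base cache.
// Note: __OLAPTablet__ covers the whole OLAP read cache, not only SYMBOL bases.
stat = pnodeRun(getSessionMemoryStat)
select userId, memSize from stat where userId in ["__OLAPTablet__", "__OLAPCachedSymbolBase__"]
```

Re-running the query after the data has been flushed and left idle for more than 5 minutes should show whether the write-side entry has been evicted.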

2.6 TSDB Storage Engine Write Cache

The TSDB engine includes a write cache mechanism that performs in-memory sorting of newly written data before persisting it to disk. The cache engine automatically flushes the buffer under either of the following conditions:

- When the cache reaches 50% of its configured capacity.

- After 10 minutes have elapsed.

The cache remains writable during flushing and only stops accepting new data when the buffer is completely full.

You can also trigger a manual flush using the flushTSDBCache function, which forces completed transactions in the buffer to be written to disk, thereby releasing memory.

Example 8. Releasing memory used by the TSDB cache engine with flushTSDBCache.

// Write data to TSDB cache engine

dbName = "dfs://test"

if(existsDatabase(dbName)){

dropDatabase(dbName)

}

t = table(1..10 as x, symbol(string(1..10)) as y)

db = database(dbName, VALUE, 1..10, engine="TSDB")

dt1 = createTable(db, t, `dt1, sortColumns=`x).append!(t)

dt2 = createTable(db, t, `dt2, sortColumns=`x).append!(t)

dt3 = createTable(db, t, `dt3, sortColumns=`x).append!(t)

pt1 = createPartitionedTable(db, t, `pt1, `x, sortColumns=`x).append!(t)

pt2 = createPartitionedTable(db, t, `pt2, `x, sortColumns=`x).append!(t)

pt3 = createPartitionedTable(db, t, `pt3, `x, sortColumns=`x).append!(t)

//output: 7560

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBCacheEngine__"

// Flush committed transactions from the TSDB cache to the disk

pnodeRun(flushTSDBCache)

//output: 0

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBCacheEngine__"

2.7 Index Caching for TSDB Level Files

Unlike the OLAP engine, which stores data in column files, the TSDB engine writes data into level files, each of which contains metadata, data blocks, and indexes for those data blocks.

When querying data from TSDB tables, the engine first loads the index of each level file in the queried partition into memory and uses it to selectively load the relevant data blocks. Because only the blocks identified by the index are read, the engine caches the level file indexes rather than entire partitions.

To manage memory usage, the TSDB engine provides the following index cache cleanup mechanisms:

- Automatic eviction: Controlled by the TSDBLevelFileIndexCacheSize configuration, which defines the maximum index cache size (in GB). By default, it's set to 5% of maxMemSize, with a minimum of 0.1 GB. When the cache exceeds the limit, the system automatically evicts the least recently used 5% of index entries.

- Manual cleanup: You can also manually clear the cache using one of the following functions:

  - Call invalidateLevelIndexCache.

  - Call clearAllCache, which, starting from versions 2.00.13 and 3.00.1, also includes the index cache for TSDB level files.
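The default limit described above (5% of maxMemSize, with a floor of 0.1 GB) can be worked through with a small helper. The function name defaultIndexCacheGB and the sample maxMemSize values are hypothetical and serve only to illustrate the arithmetic.

```dolphindb
// Sketch: default index cache limit = 5% of maxMemSize, but at least 0.1 GB.
// defaultIndexCacheGB is a hypothetical helper, not a built-in function.
def defaultIndexCacheGB(maxMemSizeGB){
    limit = 0.05 * maxMemSizeGB
    return iif(limit < 0.1, 0.1, limit)
}
defaultIndexCacheGB(8)    // 0.4 (5% of 8 GB)
defaultIndexCacheGB(1)    // 0.1 (5% would be 0.05 GB, so the floor applies)
```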

The tables used in Examples 9 and 10 are created by the following script.

dbName = "dfs://test"

if(existsDatabase(dbName)){

dropDatabase(dbName)

}

t = table(1..10 as x, symbol(string(1..10)) as y)

db = database(dbName, VALUE, 1..10, engine="TSDB")

dt1 = createTable(db, t, `dt1, sortColumns=`x)

dt2 = createTable(db, t, `dt2, sortColumns=`x)

dt3 = createTable(db, t, `dt3, sortColumns=`x)

pt1 = createPartitionedTable(db, t, `pt1, `x, sortColumns=`x)

pt2 = createPartitionedTable(db, t, `pt2, `x, sortColumns=`x)

pt3 = createPartitionedTable(db, t, `pt3, `x, sortColumns=`x)

Example 9. Clearing the TSDB index caches using invalidateLevelIndexCache.

dt1.append!(t)

dt2.append!(t)

dt3.append!(t)

pt1.append!(t)

pt2.append!(t)

pt3.append!(t)

pnodeRun(flushTSDBCache)

//output: 12510

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBLevelFileIndex__"

pnodeRun(invalidateLevelIndexCache)

//output: 0

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBLevelFileIndex__"

Example 10. Clearing the TSDB level file index cache using clearAllCache.

dt1.append!(t)

dt2.append!(t)

dt3.append!(t)

pt1.append!(t)

pt2.append!(t)

pt3.append!(t)

pnodeRun(flushTSDBCache)

//output: 12510

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBLevelFileIndex__"

pnodeRun(clearAllCache)

//output: 0

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBLevelFileIndex__"

2.8 TSDB Engine SYMBOL Base Cache

When distributed tables in TSDB contain columns of SYMBOL type, their SYMBOL base mappings are cached during read and write operations.

Before versions 2.00.13 / 3.00.1, a background thread scanned all cached SYMBOL bases every 30 seconds and evicted those that met both of the following criteria:

- It has not been accessed for over 5 minutes.

- The associated data is neither in the cache engine nor involved in any active transaction.

Starting from versions 2.00.13 / 3.00.1, the TSDB SYMBOL base cache eviction mechanism was enhanced to use an LRU strategy, considering both cache size and age. Cache size is controlled by the configuration parameter TSDBCachedSymbolBaseCapacity, while eviction timing is governed by TSDBSymbolBaseEvictTime.

As of these versions, both the clearAllCache and clearAllTSDBSymbolBaseCache functions can clear all cached SYMBOL base entries that are absent from both the cache engine and ongoing transactions.

Example 11. Clearing the TSDB SYMBOL base cache using the clearAllCache function.

dbName = "dfs://test"

if(existsDatabase(dbName)){

dropDatabase(dbName)

}

t = table(1..10 as x, symbol(string(1..10)) as y)

db = database(dbName, VALUE, 1..10,,"TSDB")

dt1 = createTable(db, t, `dt1,,"x").append!(t)

dt2 = createTable(db, t, `dt2,,"x").append!(t)

dt3 = createTable(db, t, `dt3,,"x").append!(t)

pt1 = createPartitionedTable(db, t, `pt1, `x,,"x").append!(t)

pt2 = createPartitionedTable(db, t, `pt2, `x,,"x").append!(t)

pt3 = createPartitionedTable(db, t, `pt3, `x,,"x").append!(t)

//output: 438

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBCachedSymbolBase__"

pnodeRun(flushTSDBCache)

sleep(61000)

pnodeRun(clearAllCache)

//output: 0

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBCachedSymbolBase__"
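The same check could presumably be run with clearAllTSDBSymbolBaseCache, which targets only the TSDB SYMBOL base cache and leaves other caches untouched. The sketch below reuses the tables from Example 11 and assumes the function takes no arguments. As in Example 11, a wait between the flush and the clear may be needed before the entries become eligible for removal.

```dolphindb
// Sketch: clear only the TSDB SYMBOL base cache instead of all caches.
// Assumption: clearAllTSDBSymbolBaseCache is called without arguments.
exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBCachedSymbolBase__"
// Flush first so that the SYMBOL bases are no longer referenced by the cache engine
pnodeRun(flushTSDBCache)
pnodeRun(clearAllTSDBSymbolBaseCache)
exec memSize from pnodeRun(getSessionMemoryStat) where userId="__TSDBCachedSymbolBase__"
```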

2.9 IOTDB Storage Engine Static Table Cache

The IOTDB engine uses static tables to map all sortColumns (the columns used to sort ingested data within each partition) except the last one. The maximum cache size for these static tables is specified by the IOTDBStaticTableCacheSize parameter, which defaults to 5% of maxMemSize.

Static table caching follows the same eviction logic as SYMBOL base. The system clears static table entries that meet both of the following conditions:

- They haven’t been accessed for a period (checked every 30 seconds).

- Their corresponding data is neither in the cache engine nor involved in any active transaction.

You can also manually clear the static table cache using the clearAllIOTDBStaticTableCache function.

Example 12. Manually clearing static table cache with clearAllIOTDBStaticTableCache.

if(existsDatabase('dfs://test')){

dropDatabase('dfs://test')

}

db1 = database(, partitionType=VALUE, partitionScheme=1..100)

db2 = database(, partitionType=VALUE, partitionScheme=2018.08.07..2018.08.11)

db = database('dfs://test', COMPO, [db1, db2], engine='IOTDB')

create table 'dfs://test'.'pt'(

id INT,

ticket SYMBOL,

id2 LONG,

ts TIMESTAMP,

id3 IOTANY

)

partitioned by id, ts,

sortColumns=[`ticket, `id2, `ts],

sortKeyMappingFunction=[hashBucket{, 50}, hashBucket{, 50}],

latestKeyCache=true

pt = loadTable('dfs://test', 'pt')

n=10000

idv=take(1..100, n)

ticketv=take(`adsfe`basdfewf`csdffeaefast4et4eadf join NULL, n)

id2v=take(-2 3 4 5 NULL 2, n)

tsv=take(2018.08.07..2018.08.11, n)

id3v = take(string(1..1000000), n)

t = table(idv as id, ticketv as ticket, id2v as id2, tsv as ts, id3v as id3)

pt.append!(t)

//output: 21600

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__IOTDBStaticTableCache__"

pnodeRun(clearAllIOTDBStaticTableCache)

//output: 0

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__IOTDBStaticTableCache__"

2.10 IOTDB Latest Value Table Cache

The IOTDB storage engine maintains a partitioned in-memory table as the latest value cache. This cache is updated in real time and used as the primary lookup source for the latest values, avoiding disk access. This feature can be enabled via the latestKeyCache parameter in the CREATE statement or the createPartitionedTable function. Its maximum cache size is specified by the IOTDBLatestKeyCacheSize parameter, which defaults to 5% of maxMemSize.

This cache uses the same eviction policy as the SYMBOL base cache. A partition’s latest value table is evicted when both of the following conditions are met:

- It hasn’t been accessed for a certain period (evaluated every 30 seconds).

- Its corresponding data is neither present in the cache engine nor involved in any ongoing transaction.

You can also clear this cache using the clearAllIOTDBLatestKeyCache function.

Note: If a partition still holds data in the cache engine, its latest value table will remain in memory and won't be cleared.

Example 13. Manually clearing latest value table cache using clearAllIOTDBLatestKeyCache.

if(existsDatabase('dfs://test')){

dropDatabase('dfs://test')

}

db1 = database(, partitionType=VALUE, partitionScheme=1..100)

db2 = database(, partitionType=VALUE, partitionScheme=2018.08.07..2018.08.11)

db = database('dfs://test', COMPO, [db1, db2], engine='IOTDB')

// Set latestKeyCache=true to enable the latest value cache.

create table 'dfs://test'.'pt'(

id INT,

ticket SYMBOL,

id2 LONG,

ts TIMESTAMP,

id3 IOTANY

)

partitioned by id, ts,

sortColumns=[`ticket, `id2, `ts],

sortKeyMappingFunction=[hashBucket{, 50}, hashBucket{, 50}],

latestKeyCache=true

pt = loadTable('dfs://test', 'pt')

n=10000

idv=take(1..100, n)

ticketv=take(`adsfe`basdfewf`csdffeaefast4et4eadf join NULL, n)

id2v=take(-2 3 4 5 NULL 2, n)

tsv=take(2018.08.07..2018.08.11, n)

id3v = take(string(1..1000000), n)

t = table(idv as id, ticketv as ticket, id2v as id2, tsv as ts, id3v as id3)

pt.append!(t)

//output: 55900

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__IOTDBLatestKeyCache__"

// Use clearAllIOTDBLatestKeyCache to clear the latest value cache.

pnodeRun(flushTSDBCache)

pnodeRun(clearAllIOTDBLatestKeyCache)

//output: 0

exec memSize from pnodeRun(getSessionMemoryStat) where userId="__IOTDBLatestKeyCache__"

2.11 Message Queues for Streaming Data Processing

DolphinDB provides a persistent queue and message-sending queue on the stream publisher node, and a message-receiving queue on the subscriber nodes.

As streaming data enters the stream processing system, it is first appended to the stream table on the publisher side, and then to the persistent queue and the sending queue (StreamingPubQueue). If asynchronous persistence is enabled, data in the persistent queue is written to disk asynchronously; data in the sending queue is sent to the receiving queue (StreamingSubQueue) on the subscriber nodes, where it waits for the specified handler to fetch it for further processing.

- StreamingPubQueue is the message queue on the publisher node that temporarily stores messages to be sent to subscriber nodes. Its maximum depth is specified by the maxPubQueueDepthPerSite parameter, with a default value of 10,000,000. When the network is congested, data may pile up in the queue.

- StreamingSubQueue is the message queue on the subscriber node that receives messages from the publisher node. Its maximum depth is specified by the maxSubQueueDepth parameter, with a default value of 10,000,000. If the handler processes messages more slowly than they arrive, data may pile up in the queue.

These queues are not subject to automatic eviction; memory is only released when messages are consumed.
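Since these queues can only shrink through consumption, monitoring their depth is the practical way to spot a backlog. The sketch below assumes that the built-in getStreamingStat function (not covered in this article) returns a dictionary whose pubConns and subWorkers tables expose queueDepth and queueDepthLimit columns; verify the column names against your version before relying on it.

```dolphindb
// Sketch: inspect the current streaming queue depths on this node.
// Assumption: pubConns and subWorkers contain queueDepth and queueDepthLimit columns.
stat = getStreamingStat()
pubConns = stat[`pubConns]       // one row per publishing connection
subWorkers = stat[`subWorkers]   // one row per subscription worker
select client, queueDepth, queueDepthLimit from pubConns
select topic, queueDepth, queueDepthLimit from subWorkers
```

A queueDepth that keeps approaching queueDepthLimit would suggest network congestion on the publisher side or a slow handler on the subscriber side.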

3. Summary

DolphinDB provides various types of caches across its database engines. These caches can be managed and cleared through corresponding functions or configuration settings. The table below summarizes the types of caches and their respective cleanup methods:

Table 3-1. Summary of Cache Management in DolphinDB

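| Cache Type | Description | Cleanup Methods |
|---|---|---|
| __DimensionalTable__ | the cache of dimension tables (in Bytes) | Automatic LRU eviction when memory usage exceeds warningMemSize; clearCachedDatabase function; clearAllCache function |
| __SharedTable__ | the cache of shared tables (in Bytes) | undef function |
| __OLAPTablet__ | the cache of the OLAP databases (in Bytes) | Automatic LRU eviction when memory usage reaches warningMemSize; clearAllCache function; clearCachedDatabase function |
| __OLAPCacheEngine__ | the memory usage of OLAP cache engine (in Bytes) | Automatic flush to disk (scheduled or threshold-based); flushOLAPCache function |
| __OLAPCachedSymbolBase__ | the cache of SYMBOL base in OLAP engine (in Bytes) | Automatically managed by background threads |
| __DFSMetadata__ | the memory usage of DFS metadata in distributed storage (in Bytes) | Not a cache; no manual cleanup needed |
| __TSDBCacheEngine__ | the cache of the TSDB engine (in Bytes) | Automatic flush to disk (size-based or time-based); flushTSDBCache function |
| __TSDBLevelFileIndex__ | the cache of level file index in the TSDB engine (in Bytes) | Automatic LRU eviction governed by TSDBLevelFileIndexCacheSize; invalidateLevelIndexCache function; clearAllCache function |
| __TSDBCachedSymbolBase__ | the cache of SYMBOL base in TSDB engine (in Bytes) | Automatic eviction; clearAllTSDBSymbolBaseCache function; clearAllCache function |
| __IOTDBStaticTableCache__ | the cache of static tables in the IOTDB engine (in Bytes) | Automatic eviction; clearAllIOTDBStaticTableCache function |
| __IOTDBLatestKeyCache__ | the cache of latest value tables in the IOTDB engine (in Bytes) | Automatic eviction; clearAllIOTDBLatestKeyCache function |
| __StreamingPubQueue__ | the number of unprocessed messages in the message queue on the publisher node | Automatically released after consumption; no manual cleanup. |
| __StreamingSubQueue__ | the number of unprocessed messages in the message queue on the subscriber node | Automatically released after consumption; no manual cleanup. |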